The construction industry is currently experiencing an avalanche of data growth driven by the increasing number of systems and tools. This in turn creates a need for more specialists who repeatedly perform the same processing steps and quality checks on the same data.

Faced with stagnating KPIs despite the growing volume of data and the growing number of specialists, company management inevitably realizes that processes need to be automated. This realization becomes the incentive to start comprehensive automation, whose main goal is to reduce the complexity of business processes and the influence of the human factor.

Process simplification is the formation of an automated process that replaces traditional manual control functions.

Data analysis helps companies recognize the need to automate processes

Automation, or more precisely "minimizing the role of humans in data processing", is an irreversible trend and an extremely sensitive issue for every company. Specialists in any field often hold back from fully disclosing their methods and the subtleties of their work to colleagues responsible for automation, because they recognize the risk of losing their jobs as technology evolves rapidly.

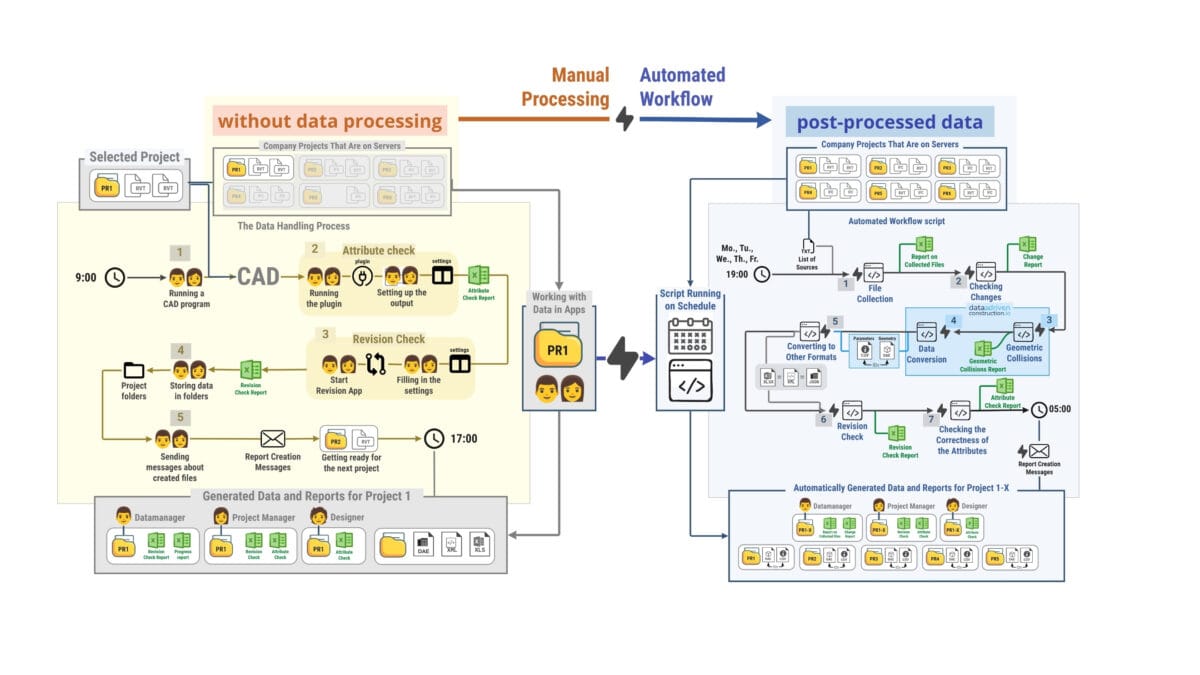

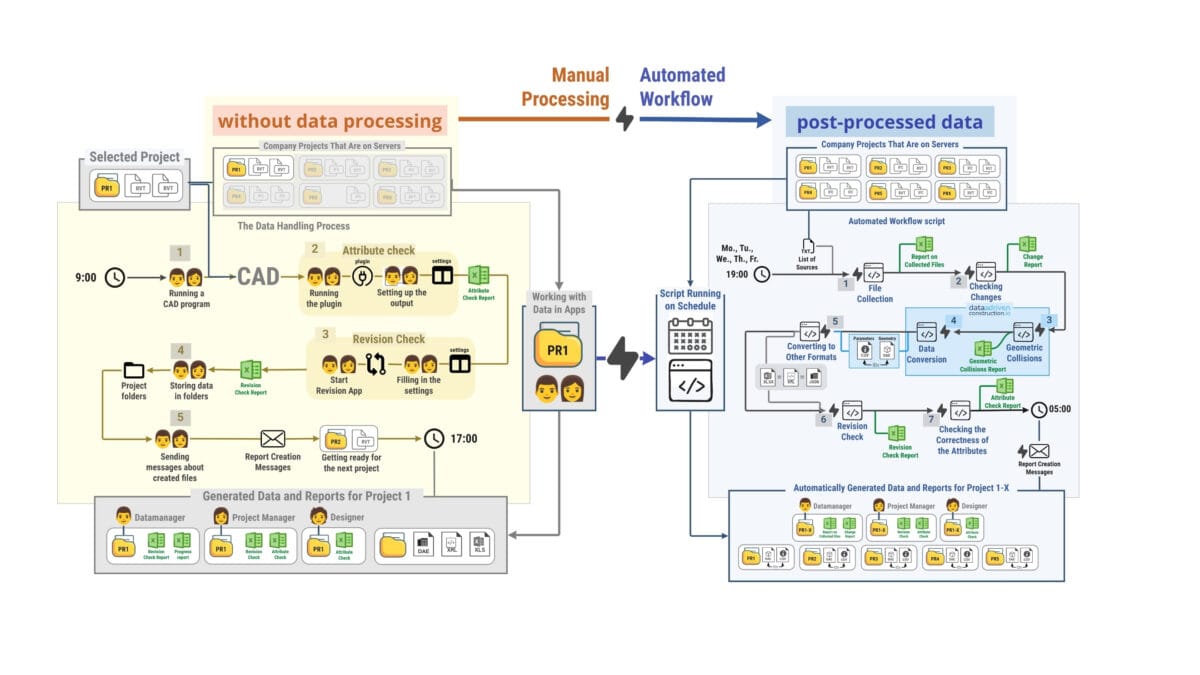

A typical sequence of data processing can be described in the context of working with data from CAD (BIM) databases (Figure 4.2-2). Traditional data processing in CAD (BIM) departments for obtaining volume tables or creating documentation based on project data occurs in the following order:

- Manual Extract: A specialist manually opens the project by launching a CAD (BIM) application.

- Verification: The next step usually involves running several plug-ins or auxiliary applications to prepare the data and assess its quality.

- Manual Transform: After preparation, the actual data processing begins, which requires manual operation of various software tools.

- Manual Load: The final action is the manual dissemination of information about the created documents to relevant stakeholders.

The automatic process, unlike traditional manual processing, is not limited by human desires and interests

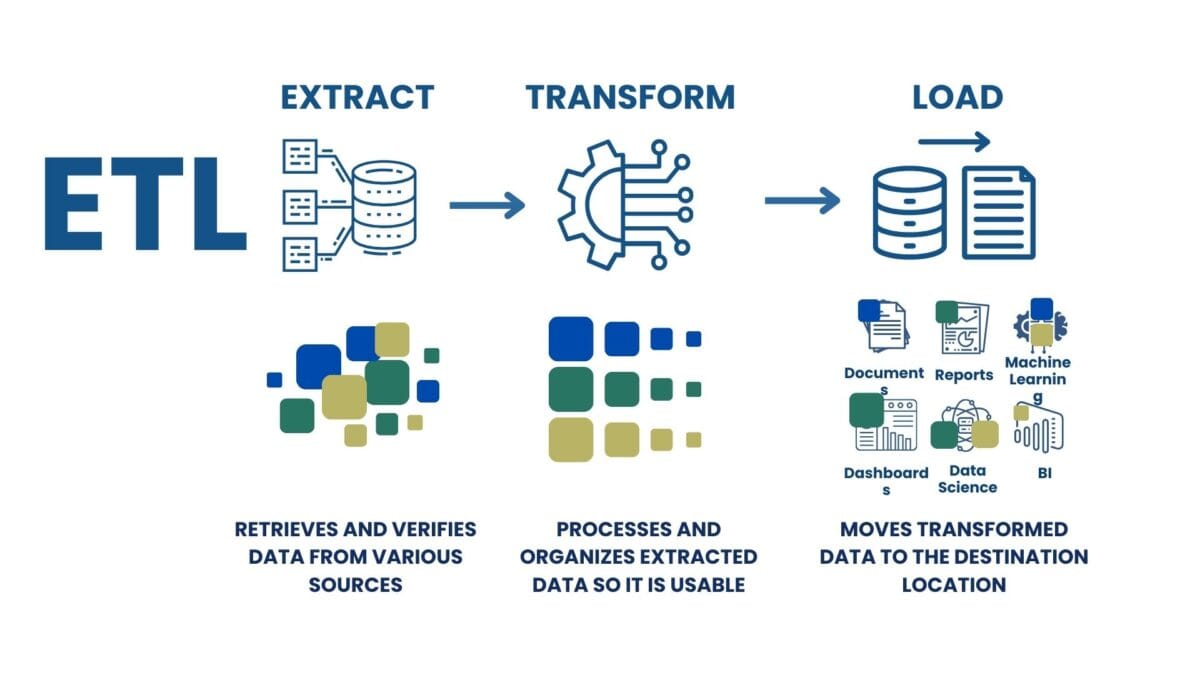

This workflow is an example of an ETL (Extract, Transform, Load) process. However, unlike the automated ETL processes common in other industries, the manual form of ETL remains typical in the construction industry.

ETL is an acronym for three key components in data processing: Extract, Transform, and Load:

- Extract: This step involves collecting data from a variety of sources. This data can range in format and structure from images to databases.

- Transform: In this step, the data is processed and transformed. This may include data cleansing, aggregation, validation, and any application of process logic. The purpose of transformation is to bring the data to the format required for the final system.

- Load: In the final step, the processed data is loaded into the target system, document, or repository, such as a Data Warehouse, Data Lakehouse, or database.
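The three steps above can be sketched as three small functions. This is a minimal illustration rather than a reference implementation: the inline CSV, the column names (`element_id`, `category`, `volume_m3`), and the `volume_summary` table are hypothetical, and an in-memory SQLite database stands in for the target repository.

```python
import io
import sqlite3

import pandas as pd

# Hypothetical raw export: element quantities as they might leave a CAD (BIM) tool.
RAW_CSV = """element_id,category,volume_m3
W-001,Wall,12.5
W-002,Wall,
S-001,Slab,30.0
S-002,Slab,18.25
W-001,Wall,12.5
"""


def extract(source: str) -> pd.DataFrame:
    """Extract: read raw records from a source (here an in-memory CSV)."""
    return pd.read_csv(io.StringIO(source))


def transform(raw: pd.DataFrame) -> pd.DataFrame:
    """Transform: drop duplicates and incomplete rows, then aggregate volumes."""
    cleaned = raw.drop_duplicates().dropna(subset=["volume_m3"])
    return (
        cleaned.groupby("category", as_index=False)["volume_m3"]
        .sum()
        .rename(columns={"volume_m3": "total_volume_m3"})
    )


def load(table: pd.DataFrame, conn: sqlite3.Connection) -> None:
    """Load: write the result into the target store (here an SQLite table)."""
    table.to_sql("volume_summary", conn, if_exists="replace", index=False)


conn = sqlite3.connect(":memory:")
summary = transform(extract(RAW_CSV))
load(summary, conn)

# Read the loaded table back to confirm what reached the target.
rows = conn.execute(
    "SELECT category, total_volume_m3 FROM volume_summary ORDER BY category"
).fetchall()
print(rows)
```

Keeping each step in its own function means a source or target can be swapped (for example, a file export instead of the inline CSV) without touching the transformation logic.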

ETL automates repetitive data processing tasks for efficient data analysis and manipulation

In a traditional manual or semi-automated data process that mirrors ETL, a data manager or project manager monitors the process by hand and manually creates the related reports and documents. Such methods take a significant amount of time, and the work can only progress within a workday that is strictly limited to 9:00 am to 5:00 pm.

In most cases, companies automate such processes by buying ready-made ERP-like solutions, which an external developer then customizes to the company's individual needs. That developer ultimately determines how efficient and effective the system is, and therefore directly affects the business efficiency of the company that purchased it.

If a company is not ready to buy or operate a comprehensive ERP system, in which processes run in a semi-automated mode, its management will sooner or later start automating processes outside of ERP systems.

In the automated version of the same ETL workflow (Figure 4.2-2), the overall process takes the form of modular code that starts by processing the data and translating it into an open, structured form. Once the structured data is available, various scripts or modules run automatically on a schedule to check for changes, transform the data, and send messages.
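One way to picture such a modular run is the sketch below, which uses only the Python standard library. All names here (`fingerprint`, `check_changes`, `run_once`, the `state` dictionary, the `outbox` list) are hypothetical and chosen for illustration. Scheduling itself is left out: in practice a scheduler such as cron would call `run_once` at fixed intervals, and the message would go to email or a chat channel rather than a list.

```python
import hashlib
import json


def fingerprint(records):
    """Return a stable hash of the structured records, used to detect changes."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def check_changes(records, state):
    """Compare the current data with the previous run and remember the new state."""
    current = fingerprint(records)
    changed = current != state.get("last_fingerprint")
    state["last_fingerprint"] = current
    return changed


def transform(records):
    """Aggregate volumes per category."""
    totals = {}
    for record in records:
        category = record["category"]
        totals[category] = totals.get(category, 0.0) + record["volume_m3"]
    return totals


def notify(totals, outbox):
    """Stand-in for sending a message: append it to an outbox list."""
    outbox.append(f"Volume table updated: {totals}")


def run_once(records, state, outbox):
    """One scheduled run: check for changes, transform, send a message."""
    if not check_changes(records, state):
        return False
    notify(transform(records), outbox)
    return True


# Simulate three scheduled runs over hypothetical structured data.
state, outbox = {}, []
records = [
    {"category": "Wall", "volume_m3": 12.5},
    {"category": "Slab", "volume_m3": 30.0},
]
first = run_once(records, state, outbox)   # data seen for the first time
second = run_once(records, state, outbox)  # nothing changed since the last run
records.append({"category": "Wall", "volume_m3": 7.5})
third = run_once(records, state, outbox)   # a new element was added
```

Because the change check runs first, unchanged projects cost almost nothing per run, and stakeholders receive a message only when the underlying data has actually changed.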

The automatic process, unlike traditional manual processing, is not limited by human desires and constraints

In traditional processes (Figure 4.2-2) without data pre-processing, tasks are handled manually: from running CAD (BIM) software and plug-ins to creating reports and preparing for the next project.

In an automated workflow, data processing is simplified through data ET(L) pre-processing: structuring and unification.

In traditional data processing, specialists work with data "as is", exactly as it is extracted from systems or software. In automated processes, ETL first translates the data into a structured, usable form before it is processed.
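As a small illustration of what "structured, usable form" can mean, the sketch below flattens nested records into a table with Pandas. The record layout, the field names, and the `"12.5 m3"` text format for volumes are assumptions made for this example and do not reflect the export format of any particular CAD (BIM) tool.

```python
import pandas as pd

# Hypothetical "as is" export: nested records with quantities stored as text.
raw_elements = [
    {"id": "W-001", "type": "Wall", "params": {"volume": "12.5 m3", "level": "L1"}},
    {"id": "S-001", "type": "Slab", "params": {"volume": "30 m3", "level": "L1"}},
]

# Structuring: flatten the nested dictionaries into one row per element.
table = pd.json_normalize(raw_elements)

# Unification: consistent column names and numeric values with a known unit.
table = table.rename(columns={"params.volume": "volume_m3", "params.level": "level"})
table["volume_m3"] = (
    table["volume_m3"].str.replace(" m3", "", regex=False).astype(float)
)

print(table)
```

Once the data is in this shape, later steps such as filtering, grouping, and validation can operate on plain columns instead of parsing each record individually.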

To provide a hands-on ETL (Extract, Transform, Load) example illustrating the data table validation process discussed in the "Data Validation and Validation Results" chapter, we'll use the Pandas library alongside ChatGPT. This approach allows us to craft code examples without delving deeply into complex coding details.