ETL Load Reporting and loading to other systems

26 February 2024

Pipeline-ETL: CAD (BIM) project verification

ETL is traditionally associated with data processing tasks for analytics and includes both structured and unstructured data.

Pipeline, on the other hand, is a more general concept that can be applied in a variety of contexts, including but not limited to data processing.

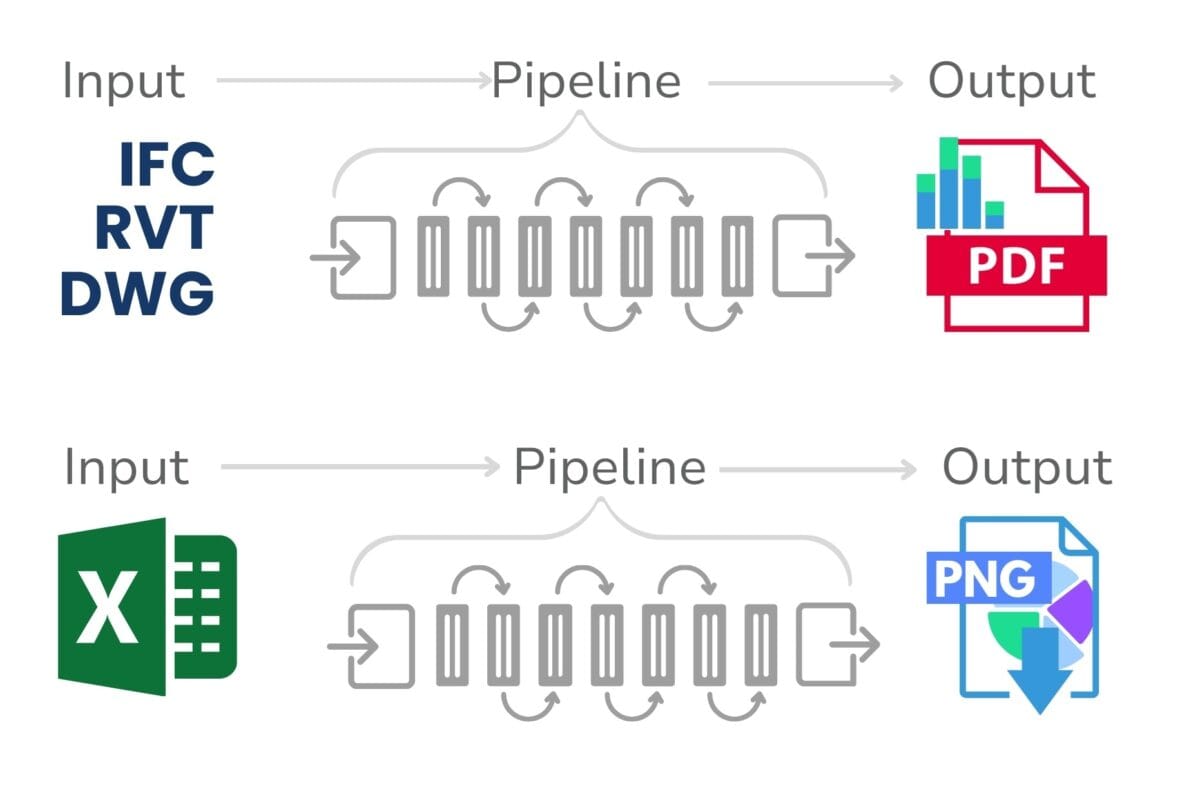

A pipeline describes any processing sequence where the output of one step is the input for the next. The idea applies not only to data, but also to task flows, operations in software, and other processes.

A Pipeline is a processing sequence where the output of one stage becomes the input for the next stage.
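As a rough illustration of this definition, a pipeline can be sketched in Python as a list of functions applied in order, each one receiving the previous one's result. The stage names `extract`, `transform`, and `load`, and the comma-separated input string, are hypothetical and chosen only for the example.

```python
from functools import reduce
from typing import Any, Callable, Iterable

Stage = Callable[[Any], Any]


def run_pipeline(data: Any, stages: Iterable[Stage]) -> Any:
    """Pass data through each stage; every output becomes the next input."""
    return reduce(lambda value, stage: stage(value), stages, data)


# Hypothetical stages, named after the classic ETL steps.
def extract(raw: str) -> list[str]:
    # Split a raw comma-separated string into individual fields.
    return raw.split(",")


def transform(items: list[str]) -> list[int]:
    # Clean up whitespace and convert each field to an integer.
    return [int(item.strip()) for item in items]


def load(values: list[int]) -> dict:
    # Stand-in for writing to a target system: build a summary record.
    return {"count": len(values), "total": sum(values)}


result = run_pipeline("3, 5, 8", [extract, transform, load])
print(result)  # {'count': 3, 'total': 16}
```

Because the stages are plain functions, reordering, removing, or replacing one of them only means editing the list passed to `run_pipeline`.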

Pipelines are a key control element in process automation and data processing. They make it possible to organize complex data processing sequences into a digestible, sequential, and modular format. This not only improves code readability and maintainability, but also greatly simplifies debugging and testing of the individual stages.
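One way to see why a modular layout helps with debugging is to record the output of every stage as the pipeline runs. The sketch below assumes stages are ordinary Python functions; `run_with_trace`, `strip_blanks`, and `to_upper` are hypothetical names used only for illustration.

```python
from typing import Any, Callable, Sequence

Stage = Callable[[Any], Any]


def run_with_trace(data: Any, stages: Sequence[Stage]) -> tuple[Any, list[tuple[str, Any]]]:
    """Run the stages in order and keep each intermediate result for inspection."""
    trace = []
    value = data
    for stage in stages:
        value = stage(value)
        # Store the stage name next to its output so a faulty step is easy to spot.
        trace.append((stage.__name__, value))
    return value, trace


# Hypothetical stages; each one can also be tested on its own.
def strip_blanks(rows: list[str]) -> list[str]:
    return [row for row in rows if row.strip()]


def to_upper(rows: list[str]) -> list[str]:
    return [row.upper() for row in rows]


final, trace = run_with_trace(["wall", " ", "door"], [strip_blanks, to_upper])
for name, output in trace:
    print(name, "->", output)
```

If the final result looks wrong, the trace shows which stage first produced unexpected output, and that stage can then be called directly with a small input in a unit test.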

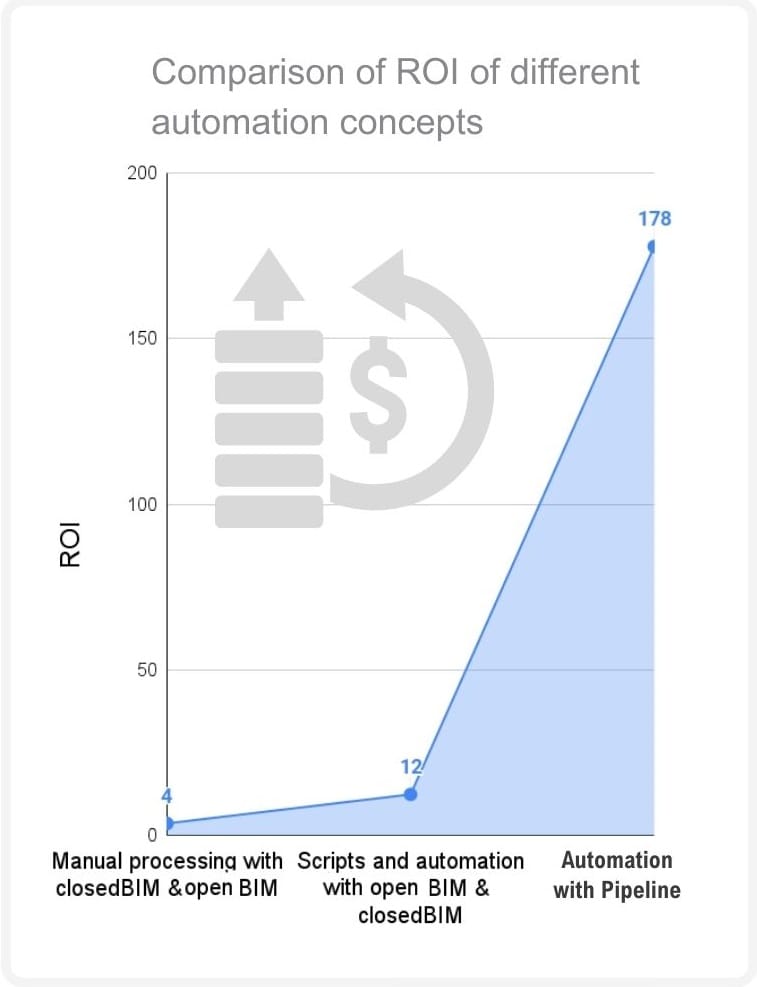

An automated data validation process can reduce the time needed to complete tasks by a factor of tens or even hundreds compared with manual processing or automation inside CAD (BIM) tools.
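A minimal sketch of what such an automated check might look like is shown below. It assumes the CAD (BIM) project has already been exported to a list of records; the field names `id`, `category`, and `volume`, and the two rules, are hypothetical and would be replaced by the project's real requirements.

```python
from typing import Callable

Element = dict[str, object]
Rule = tuple[str, Callable[[Element], bool]]

# Hypothetical rules: each pairs a readable name with a check function.
RULES: list[Rule] = [
    ("has category", lambda e: bool(e.get("category"))),
    (
        "volume is positive",
        lambda e: isinstance(e.get("volume"), (int, float)) and e["volume"] > 0,
    ),
]


def validate(elements: list[Element], rules: list[Rule] = RULES) -> list[dict]:
    """Return one issue record for every rule that an element fails."""
    issues = []
    for element in elements:
        for rule_name, check in rules:
            if not check(element):
                issues.append({"id": element.get("id"), "failed_rule": rule_name})
    return issues


# Made-up sample records standing in for an exported model.
elements = [
    {"id": "W-001", "category": "Wall", "volume": 12.5},
    {"id": "D-002", "category": "", "volume": 0.4},
    {"id": "S-003", "category": "Slab", "volume": None},
]

issues = validate(elements)
for issue in issues:
    print(issue)
```

The resulting list of issues can be passed to a reporting or loading stage, so the same check runs unchanged on every new export of the model.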

Automated pipeline processes far outperform traditional data processing in closed applications or in expensive and complex ERP systems in terms of speed, and they can be configured to run tasks automatically.

Automating workflows not only increases team productivity by freeing up time for more meaningful and less routine tasks, but also serves as an important first step towards incorporating artificial intelligence (AI) technologies into business processes.