In order to transform-verify collected data in the ETL process, we will use regular expressions to check the consistency and integrity of attributes based on predefined rules. We talked more about RegEx in the chapter "Translating requirements into structured form".

Regex is the key to efficient data validation, allowing to perform automated checks against complex criteria with just a few lines of code, greatly simplifying the data validation process.

In the "Transform" step in the construction data management, regular expressions (RegEx) may for example be used to check the conformity of formats of identifiers, such as the "ID" attribute.

For example, from our experience we establish that valid values for the "ID" attribute may include "W-NEW", "W-OLD1" or "D-122" or similar values where the first character is a letter, followed by a dash and then three alphabetic characters 'NEW', 'OLD' or any three digit number.

A manager with historical data establishes requirements for attributes and their boundary values that are acceptable for use in his applications and business processes

The following RegEx pattern can be used to perform this check: ^W-NEW$|^W-OLD[0-9]+$|^D-1[0-9]{2}$. This pattern allows to check if all identifiers in the dataset match the criteria set. If any value does not match this pattern, it will result in an error.

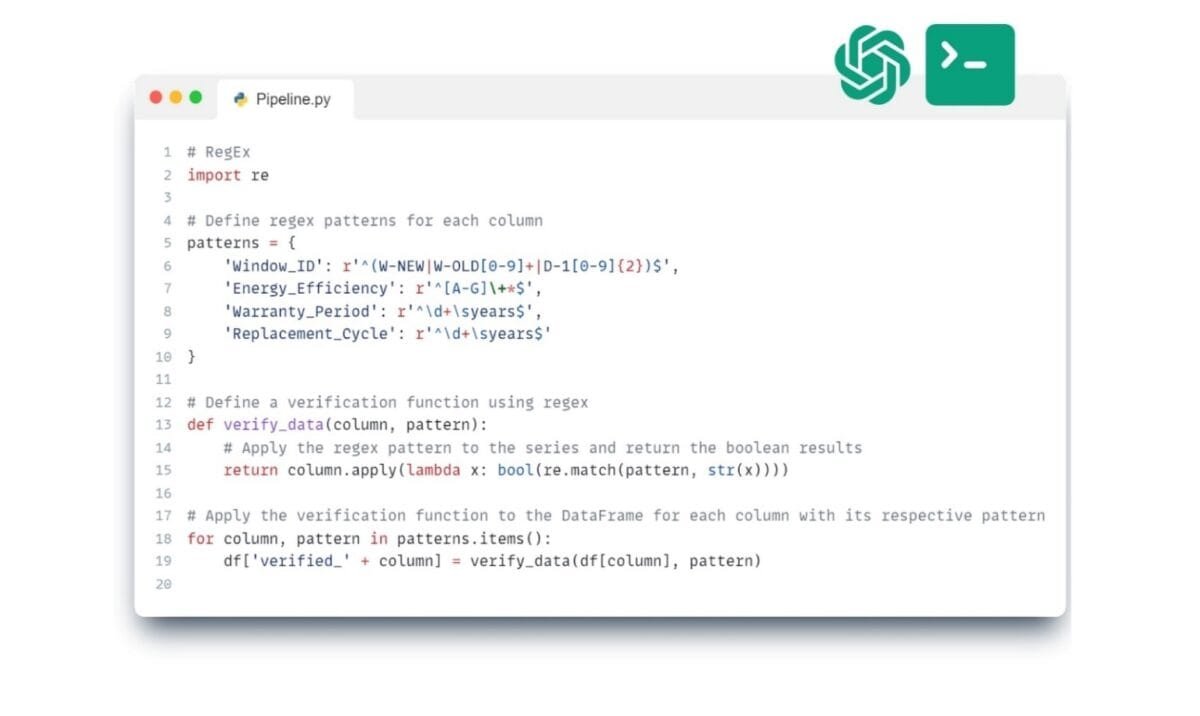

To create Python code to transform data and use the data requirements to create a result table, simply formulate a query to ChatGPT.

❏ Text request to ChatGPT:

Write code to validate DataFrame columns with regular expressions that validate identifiers in the format 'W-NEW' or 'W-OLD', energy efficiency with letters 'A' through 'G', warranty period and replacement cycle with numeric values in years ⏎

➤ ChatGPT Answer:

Python code automates the validation by applying regex patterns, ensuring that each each attribute of a data element meets industry standards for accuracy and consistency

The above automatically derived Python code uses the re (RegEx regular expressions) library to define a function that validates each attribute of a data element in a pandas DataFrame. For each specified column (attribute), it applies a RegEx pattern to check if each record matches the expected format, and adds the results as new boolean (False/True attribute) columns to the DataFrame.

Having collected and transformed the data, the only thing left to do is to upload the results into the format we need, document or dashboard.