Apache Airflow is a free, open-source platform for automating, orchestrating, and monitoring workflows such as ETL pipelines.

Working with large amounts of data means repeating the same steps every day:

- Extract: download files from different sources (for example, from suppliers or customers).

- Transform: bring this data into the desired format (structure, cleanse, and validate it).

- Load: send the results for verification and reporting (upload them to the required systems, documents, databases, or dashboards).
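The three steps above can be sketched in plain Python. This is only an illustration under stated assumptions: the sample CSV text, the `prices` table, and the function names are hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a file received from a supplier.
RAW_CSV = "sku,price\nA-1, 10.50\nB-2,not_a_number\nC-3,7\n"


def extract(raw_text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: trim whitespace, convert prices, drop invalid rows."""
    clean = []
    for row in rows:
        try:
            price = float(row["price"].strip())
        except ValueError:
            continue  # validation: skip rows whose price is not numeric
        clean.append((row["sku"].strip(), price))
    return clean


def load(records, connection):
    """Load: write the cleaned records into a database table."""
    connection.execute("CREATE TABLE IF NOT EXISTS prices (sku TEXT, price REAL)")
    connection.executemany("INSERT INTO prices VALUES (?, ?)", records)
    connection.commit()


connection = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), connection)
loaded = connection.execute("SELECT sku, price FROM prices ORDER BY sku").fetchall()
print(loaded)
```

The row with a non-numeric price is dropped during the transform step, so only two records reach the table.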

Manual execution of such ETL processes takes considerable time and carries a risk of human error. A change in a data source or a failure at any step can cause delays and incorrect results.

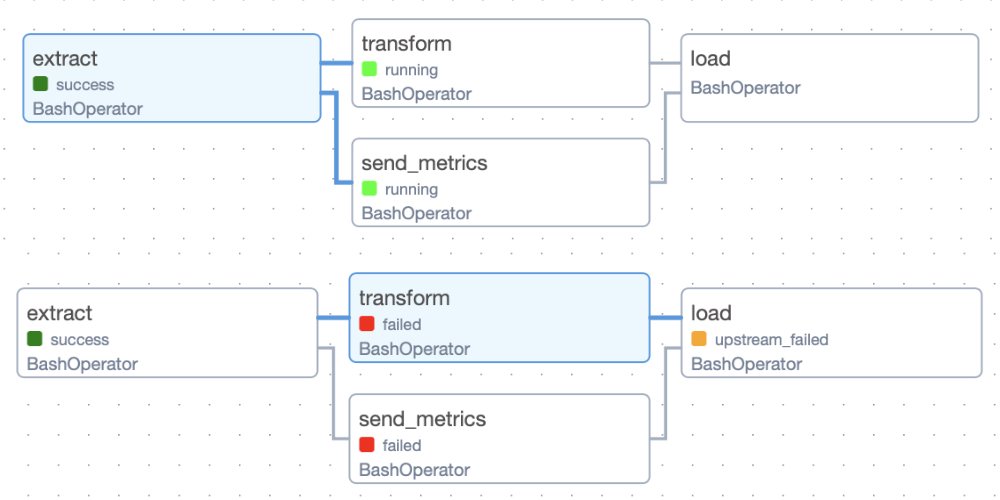

Automation tools such as Apache Airflow let you build a reliable ETL pipeline, minimize errors, reduce processing time, and help ensure data correctness at every step. At the heart of Apache Airflow is the DAG (Directed Acyclic Graph): a graph in which each task (operator) is linked to the tasks it depends on and runs only after they have finished. Because a DAG contains no cycles, no task can depend on itself, directly or indirectly, so a valid execution order always exists and task execution stays logical and predictable.
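To make the "no cycles" property concrete, here is a minimal sketch that uses Python's standard `graphlib` module rather than Airflow itself. The task names `a`, `b`, `c` and the helper `execution_order` are hypothetical; the point is only that an acyclic graph yields a run order, while a cyclic one is rejected.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies):
    """Return one valid run order, or raise CycleError if the graph has a cycle.

    `dependencies` maps each task name to the set of tasks it must wait for.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Acyclic: b waits for a, c waits for b.
valid = {"b": {"a"}, "c": {"b"}}
order = execution_order(valid)
print(order)

# Cyclic: a waits for c, which waits for b, which waits for a.
cyclic = {"a": {"c"}, "b": {"a"}, "c": {"b"}}
try:
    execution_order(cyclic)
    cycle_detected = False
except CycleError:
    cycle_detected = True
print(cycle_detected)
```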

Airflow takes care of orchestration: managing dependencies between tasks, controlling execution schedules, tracking status, and automatically responding to failures. This approach minimizes manual intervention and makes the entire process more reliable.
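One common automatic response to a failure is to retry the task. The sketch below shows the idea in plain Python; it is not Airflow's implementation, and `run_with_retries`, `flaky_extract`, and `always_broken` are hypothetical names used only for illustration.

```python
def run_with_retries(task, retries=2):
    """Call `task` until it succeeds or the retry budget is spent.

    Returns (status, attempts), where status is "success" or "failed".
    """
    attempts = 0
    while attempts <= retries:
        attempts += 1
        try:
            task()
            return "success", attempts
        except Exception:
            pass  # a real orchestrator would log the error and wait before retrying
    return "failed", attempts


calls = {"count": 0}


def flaky_extract():
    """Hypothetical task that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")


def always_broken():
    """Hypothetical task that never succeeds."""
    raise ValueError("bad input")


flaky_result = run_with_retries(flaky_extract, retries=2)
broken_result = run_with_retries(always_broken, retries=1)
print(flaky_result, broken_result)
```

In Airflow, comparable behavior is configured per task through the `retries` and `retry_delay` arguments instead of being written by hand.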

A task orchestrator is a tool or system that manages and controls task execution in complex computing and information environments. It handles the deployment, automation, and management of tasks in order to improve performance and optimize resource use.

Airflow is widely used to orchestrate and automate distributed computing, data processing, ETL (Extract, Transform, Load) processes, task scheduling, and other data scenarios. By default, Apache Airflow uses SQLite as its metadata database, which is suitable for local testing; production deployments typically use PostgreSQL or MySQL instead.

A simple ETL-style DAG consists of the tasks Extract, Transform, and Load. The graph, which is managed through the user interface (Fig. 7.4-1), defines the order in which the tasks (code fragments) are executed: for example, extract runs first, then transform (and sending_metrics), and the load task completes the work. When all tasks have completed successfully, the data loading process is considered successful.
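The execution order of this example can be traced with a small stand-in written with the standard `graphlib` module (not the Airflow API). It assumes that sending_metrics runs alongside transform after extract, and that load waits only for transform; the text does not state whether load also waits for sending_metrics. The helper `run_in_waves` is hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it must wait for (assumed wiring).
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "sending_metrics": {"extract"},
    "load": {"transform"},
}


def run_in_waves(dependencies):
    """Run tasks in dependency order, grouping tasks that are ready together."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves, status = [], {}
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are all done
        waves.append(ready)
        for name in ready:
            status[name] = "success"  # a real task would execute its code here
            sorter.done(name)
    return waves, status


waves, status = run_in_waves(dependencies)
print(waves)
print(all(state == "success" for state in status.values()))
```

In an Airflow DAG file, the same wiring is usually expressed with the `>>` operator, for example `extract >> [transform, sending_metrics]` followed by `transform >> load`.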