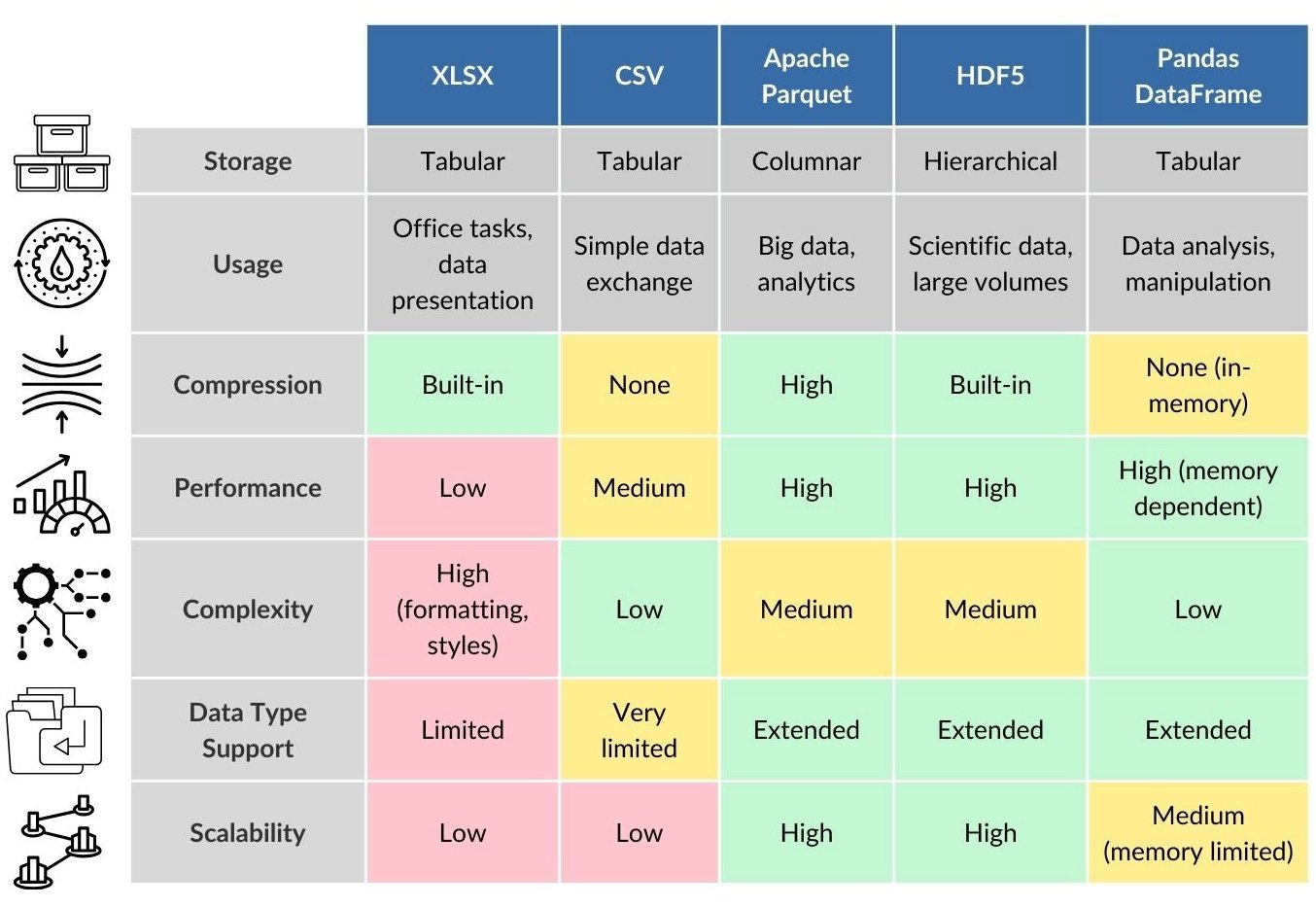

Storage formats play a key role in the scalability, reliability, and performance of analytics infrastructure. For data analysis and processing – such as filtering, grouping, and aggregation – our examples used Pandas DataFrame – a popular structure for working with data in RAM.

However, Pandas DataFrame does not have its own storage format, so after processing is complete, the data is exported to one of the external formats – most often CSV or XLSX. These tabular formats are easy to exchange and compatible with most external systems, but have a number of limitations: low storage efficiency, lack of compression and poor versioning support:

CSV (Comma-Separated Values): a simple text format widely supported by various platforms and tools. It is easy to use, but does not support complex data types or compression.

XLSX (Excel Open XML Spreadsheet): a Microsoft Excel file format that supports sophisticated features such as formulas, charts, and styling. While it is convenient for manual data analysis and visualization, it is not optimized for large-scale data processing.

In addition to the popular tabular XLSX and CSV, there are several popular formats for efficiently storing structured data (Fig. 8.1-2), each with unique advantages depending on specific data storage and analysis requirements:

Apache Parquet: a columnar data storage file format optimized for use in data analysis systems. It offers efficient data compression and encoding schemes, making it ideal for complex data structures and big data processing.

Apache ORC (Optimized Row Columnar): Similar to Parquet, ORC provides high compression and efficient data storage. It is optimized for heavy read operations and is well suited for storing data lakes.

JSON (JavaScript Object Notation): although JSON is not as efficient in terms of data storage compared to binary formats such as Parquet or ORC, it is very accessible and easy to work with, making it ideal for scripts where readability and web compatibility are important.

Feather: a fast, lightweight, and easy-to-use analytics-oriented binary columnar data storage format. It is designed to efficiently transfer data between Python (Pandas) and R, making it an excellent choice for projects involving these programming environments.

HDF5 (Hierarchical Data Format version 5): designed for storing and organizing large amounts of data. It supports a wide range of data types and is well suited for working with complex collections of data. HDF5 is particularly popular in scientific computing due to its ability to efficiently store and access large datasets.

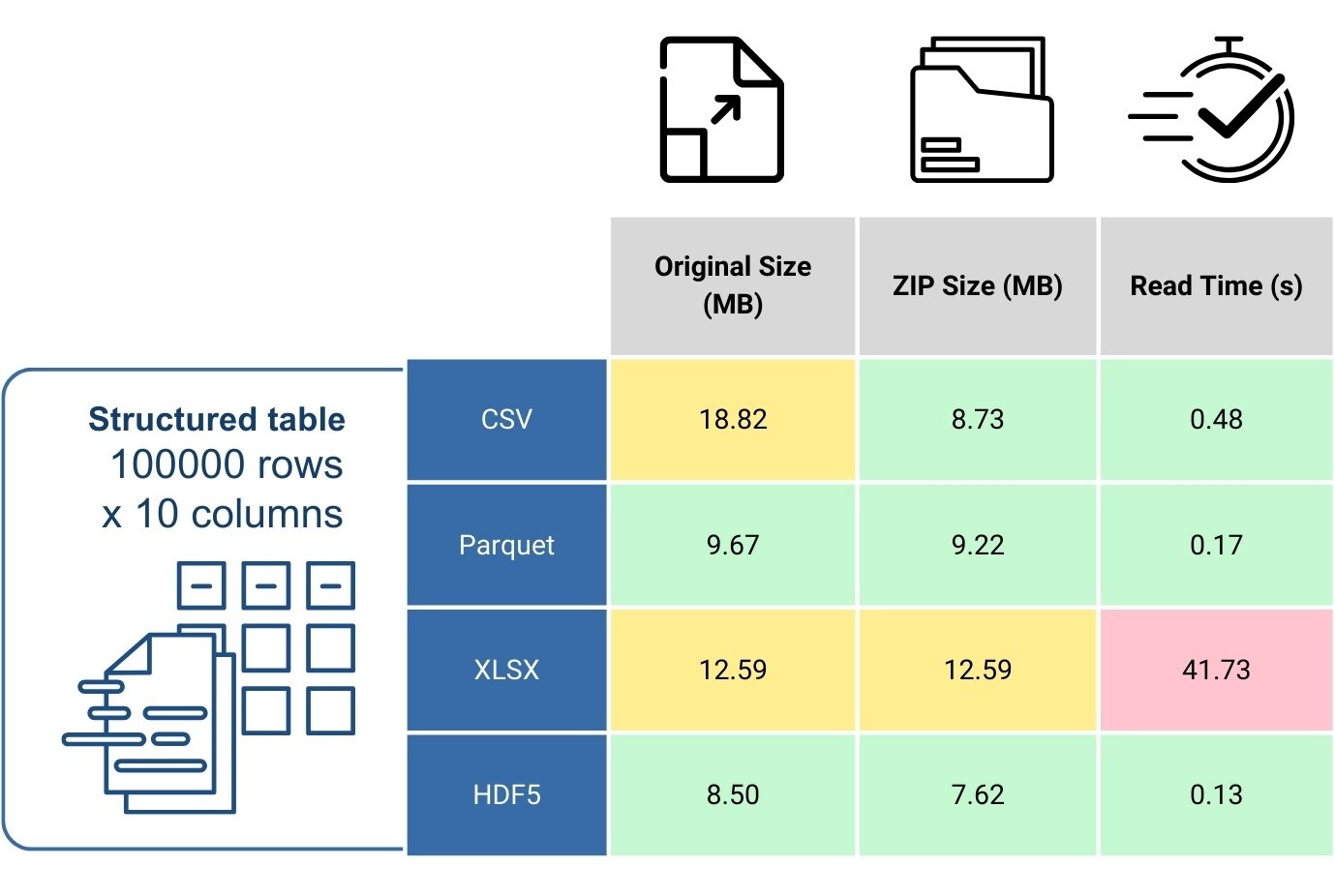

To conduct a comparative analysis of the formats used at the Load stage of the ETL process, a table showing file sizes and reading times was created (Fig. 8.1-3). Files with identical data were used in the study: the table contained 10,000 rows and 10 columns filled with random values.

The following storage formats are included in the study: CSV, Parquet, XLSX and HDF5, as well as their compressed versions in ZIP archives. The raw data were generated using the NumPy library and represented as a Pandas DataFrame structure. The testing process consisted of the following stages:

- File saving: the dataframe is saved in four different formats: CSV, Parquet, XLSX, and HDF5. Each format has unique features in the way it stores data, affecting file size and read speed.

- ZIP file compression: to analyze the effectiveness of standard compression, each file was further compressed into a ZIP archive.

- File reading (ETL – Load): reading time was measured for each file after its unpacking from ZIP. This allows estimating the speed of data access after extraction from the archive.

It is important to note that Pandas DataFrame was not used directly in the size or read time analysis, as it does not represent an independent storage format. It served only as a intermediate structure for generation and subsequent saving of data into different formats.

CSV and HDF5 files exhibit (Fig. 8.1-3) high compression efficiency, significantly reducing their size when packed in ZIP, which can be particularly useful in scenarios requiring storage optimization. XLSX files, on the other hand, are virtually uncompressible and their size in ZIP remains comparable to the original, making them less favorable for use in large data volumes or in environments where speed of data access is important. In addition, the read time for XLSX is significantly higher compared to other formats, making it less preferable for fast data read operations. Apache Parquet has demonstrated high performance for analytical tasks and large data volumes due to its columnar structure.