In this chapter, we look at the digital transformation roadmap and identify the key steps required to implement a data-driven approach that can help transform both the corporate culture and the company’s information ecosystem.

According to the McKinsey Quarterly article “Why digital strategies fail” (25 Jan. 2018), there are at least five reasons why companies fail to achieve their digital transformation goals:

- Blurred definitions: executives and managers have different understandings of what “digital” means, leading to misunderstandings and inconsistencies.

- Misunderstanding the digital economy: many companies underestimate the magnitude of the changes that digitalization is bringing to business models and industry dynamics (Fig. 10.1-6).

- Ignoring ecosystems: companies focus on individual technology solutions (data silos), overlooking the need to integrate into broader digital ecosystems (Fig. 2.2-2, Fig. 4.1-12).

- Underestimating competitors’ digitalization: managers fail to account for the fact that competitors are also actively adopting digital technologies, which can erode their own competitive advantage.

- Missing the duality of digitalization: CEOs delegate responsibility for digital transformation to other executives, which bureaucratizes control and slows down the change process.

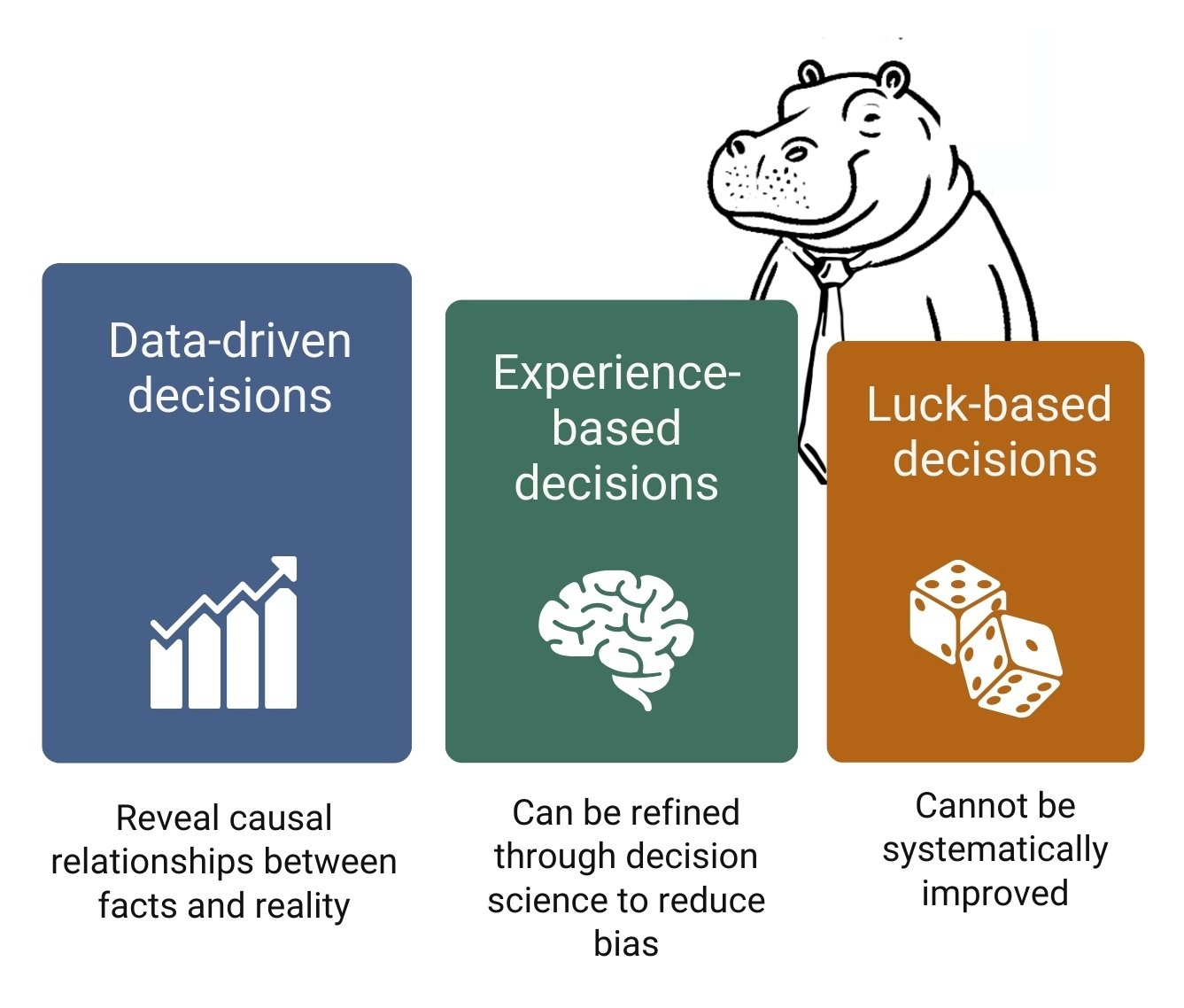

Addressing these challenges requires a clear understanding and alignment of digital strategies at all levels of the organization. Before building a digital strategy, it is important to understand the starting point. Many organizations tend to adopt new tools and platforms without having a complete picture of the current state.

Step 1: Conduct an audit of your current systems and data.

Before changing processes, it’s important to understand what’s already in place. Conducting an audit allows you to identify weaknesses in data management and understand what resources can be used. An audit is a kind of “X-ray” of your business processes. It allows you to identify areas of risk and determine which data is critical to your project or business and which is secondary.

Key Actions:

- Map your IT environment (in Draw.io, Lucidchart, Miro, Visio or Canva). List the systems involved in your processes (ERP, CAD, CAFM, CPM, SCM and others), which we discussed in the chapter “Technology and Management Systems in Modern Construction” (Fig. 1.2-4).

- For each system, assess data quality: how often duplicates, missing values and format inconsistencies occur.

- Identify “pain points”: places where processes break down or regularly require manual intervention, such as imports, exports and additional validation steps.
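To make the data quality assessment concrete, here is a minimal Python sketch that profiles one system export for the three issues listed above: duplicate rows, missing values and format inconsistencies. The sample rows, column names and the expected patterns in `EXPECTED_FORMATS` are hypothetical assumptions for illustration; in practice the rows would be read from your own system exports.

```python
import re
from collections import Counter

# Hypothetical sample export from one system (e.g. an ERP or CAD export).
rows = [
    {"id": "W-001", "category": "Wall", "volume_m3": "12.5", "date": "2024-03-01"},
    {"id": "W-002", "category": "wall", "volume_m3": "", "date": "01.03.2024"},
    {"id": "W-001", "category": "Wall", "volume_m3": "12.5", "date": "2024-03-01"},
    {"id": "S-001", "category": "Slab", "volume_m3": "40", "date": None},
]

# Assumed expected format per column (illustrative, not a standard).
EXPECTED_FORMATS = {
    "id": r"[A-Z]-\d{3}",
    "volume_m3": r"\d+(\.\d+)?",
    "date": r"\d{4}-\d{2}-\d{2}",  # YYYY-MM-DD
}


def is_missing(value):
    """Treat None and empty or whitespace-only strings as missing."""
    return value is None or str(value).strip() == ""


def profile(rows, expected_formats):
    """Count duplicate rows, missing values and format violations per column."""
    # Exact duplicates: rows with identical values in every column.
    counts = Counter(tuple(sorted(r.items())) for r in rows)
    duplicates = sum(c - 1 for c in counts.values() if c > 1)

    columns = sorted({key for r in rows for key in r})
    missing = {c: 0 for c in columns}
    bad_format = {c: 0 for c in expected_formats}
    for r in rows:
        for c in columns:
            value = r.get(c)
            if is_missing(value):
                missing[c] += 1
            elif c in expected_formats and not re.fullmatch(expected_formats[c], str(value)):
                bad_format[c] += 1
    return {"duplicates": duplicates, "missing": missing, "bad_format": bad_format}


def case_variants(rows, column):
    """Find values that differ only by letter case, e.g. 'Wall' vs 'wall'."""
    groups = {}
    for r in rows:
        value = r.get(column)
        if not is_missing(value):
            groups.setdefault(str(value).lower(), set()).add(value)
    return {k: sorted(v) for k, v in groups.items() if len(v) > 1}


report = profile(rows, EXPECTED_FORMATS)
print(report)
print(case_variants(rows, "category"))
```

Running `profile` for each system and collecting the results in one table gives a simple, comparable picture of where data quality problems concentrate.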

If you want the team to trust the reports, you need to make sure the data is correct from the start.

A data quality audit will show you which data:

- Needs further work (automated cleansing or additional transformation must be set up)

- Is “garbage” that only clogs up systems and can be retired by no longer using it in your processes.
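The split between data that needs work and data that can be retired can be approximated with simple rules. The Python sketch below treats a column as a retirement candidate if it is almost empty or holds a single constant value, and as needing work if it is only partly filled. The threshold `RETIRE_BELOW_FILL_RATE` and the sample door records are hypothetical; the result is a starting point for a discussion with data owners, not an automatic deletion rule.

```python
# Hypothetical threshold: columns filled in fewer than 10% of rows
# are flagged as candidates for retirement.
RETIRE_BELOW_FILL_RATE = 0.1


def classify_columns(rows):
    """Label each column 'ok', 'needs_work' or 'retire_candidate'."""
    columns = sorted({key for r in rows for key in r})
    labels = {}
    for c in columns:
        filled = [r.get(c) for r in rows if r.get(c) not in (None, "")]
        fill_rate = len(filled) / len(rows) if rows else 0.0
        constant = len(rows) > 1 and len(set(filled)) == 1
        if fill_rate < RETIRE_BELOW_FILL_RATE or constant:
            # Almost empty or carries no information: review and retire.
            labels[c] = "retire_candidate"
        elif fill_rate < 1.0:
            # Partly filled: needs cleansing or enrichment.
            labels[c] = "needs_work"
        else:
            labels[c] = "ok"
    return labels


# Hypothetical door schedule exported from a CAD model.
rows = [
    {"id": "D-01", "fire_rating": "EI30", "legacy_code": "", "status": "active"},
    {"id": "D-02", "fire_rating": "", "legacy_code": "", "status": "active"},
    {"id": "D-03", "fire_rating": "EI60", "legacy_code": "", "status": "active"},
]
print(classify_columns(rows))
```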

You can conduct such an audit on your own, but it is sometimes useful to engage an external consultant, especially one from another industry: a fresh perspective and independence from construction “peculiarities” will help you soberly assess the status quo and avoid the typical pitfall of bias toward certain solutions and technologies.

Step 2: Identify key standards for data harmonization.

After the audit, it is necessary to create common rules for working with data. As we discussed in the chapter “Standards: From Random Files to an Intelligent Data Model”, this will help eliminate siloed information flows.

Without a single standard, each team will continue to work “their own way” and you will maintain a “zoo” of integrations where data is lost with every conversion.

Key Actions:

- Select the data formats to use for exchanging information between systems:

- For tabular data, this can be structured formats like CSV, XLSX or more efficient formats like Parquet

- For exchanging semi-structured data and documents: JSON or XML

- Learn to work with data models:

- Start by parameterizing tasks at the level of the conceptual data model, as described in the chapter “Data modeling: conceptual, logical and physical model” (Fig. 4.3-2).

- As you delve deeper into the business process logic, move to formalizing requirements using parameters in the logical and physical models (Fig. 4.3-6)

- Identify key entities, their attributes and relationships within processes, and visualize these relationships – both between entities and between parameters (Fig. 4.3-7).

- Use regular expressions (RegEx) to validate and standardize data (Fig. 4.4-7), as we discussed in the chapter “Structured Requirements and RegEx Regular Expressions”. RegEx is not a complex topic, but it is an extremely important one when defining requirements at the physical data model level.
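Requirements at the physical model level can be written down as a table that maps each element class to its required parameters and a RegEx pattern for each value. The following Python sketch shows one way to check element records against such a table with `re.fullmatch`; the classes, parameter names and patterns in `REQUIREMENTS` are hypothetical examples, not a standard.

```python
import re

# Hypothetical requirements table: for each element class, the parameters
# it must have and a RegEx pattern that each value must fully match.
REQUIREMENTS = {
    "Door": {
        "Width_mm": r"\d{3,4}",         # e.g. 900
        "FireRating": r"EI(30|60|90)",  # e.g. EI60
    },
    "Wall": {
        "Thickness_mm": r"\d{2,3}",
        "Material": r"[A-Za-z ]+",
    },
}


def validate(elements, requirements):
    """Return a list of (element_id, parameter, problem) tuples."""
    problems = []
    for el in elements:
        rules = requirements.get(el.get("Category"), {})
        for param, pattern in rules.items():
            value = el.get(param)
            if value is None or str(value).strip() == "":
                problems.append((el["Id"], param, "missing"))
            elif not re.fullmatch(pattern, str(value)):
                problems.append((el["Id"], param, f"invalid value {value!r}"))
    return problems


elements = [
    {"Id": "1001", "Category": "Door", "Width_mm": "900", "FireRating": "EI60"},
    {"Id": "1002", "Category": "Door", "Width_mm": "90cm", "FireRating": "EI60"},
    {"Id": "2001", "Category": "Wall", "Thickness_mm": "240"},
]
issues = validate(elements, REQUIREMENTS)
for issue in issues:
    print(issue)
```

Keeping the patterns in a table rather than in code means the requirements can be reviewed and extended by specialists who do not write Python.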

Without data-level standards and process visualization, it is impossible to build a consistent and scalable digital environment. Remember: “bad data is expensive,” and the cost of errors grows as a project or organization becomes more complex. Unifying formats and defining naming, structure and validation rules is an investment in the stability and scalability of future solutions.
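One practical form of such a naming standard is an alias table that maps the column names used by different systems to a single agreed name. The Python sketch below assumes a hypothetical `COLUMN_ALIASES` table, harmonizes two small sample exports and writes the result to both CSV and JSON using only the standard library.

```python
import csv
import io
import json

# Hypothetical naming standard: names used by different systems -> one name.
COLUMN_ALIASES = {
    "ElementID": "element_id", "GUID": "element_id",
    "Cat": "category", "Category": "category",
    "Vol": "volume_m3", "Volume (m3)": "volume_m3",
}


def harmonize(records):
    """Rename columns to the standard names and normalize category spelling."""
    out = []
    for rec in records:
        row = {COLUMN_ALIASES.get(k, k): v for k, v in rec.items()}
        if row.get("category"):
            row["category"] = row["category"].strip().title()
        out.append(row)
    return out


def to_csv(rows, fieldnames):
    """Serialize rows to CSV text with a fixed column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


cad_export = [{"GUID": "a1", "Cat": "wall ", "Vol": 12.5}]
erp_export = [{"ElementID": "a2", "Category": "SLAB", "Volume (m3)": 40.0}]

rows = harmonize(cad_export) + harmonize(erp_export)
csv_text = to_csv(rows, ["element_id", "category", "volume_m3"])
json_text = json.dumps(rows, indent=2)
print(csv_text)
print(json_text)
```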

Step 3: Implement DataOps and automate processes.

Without a well-defined architecture, companies inevitably end up with disparate data scattered across siloed information systems. Such data is not integrated, is duplicated in multiple locations and is costly to maintain.

Imagine that data is water, and the data architecture is the complex system of pipelines that transports that water from its storage source to its point of use. It is the data architecture that determines how information is collected, stored, transformed, analyzed, and delivered to end users or applications.

DataOps (Data Operations) is a methodology that integrates the collection, cleansing, validation, and utilization of data into a single automated process flow, as we discussed in detail in Part 8 of the book.
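The idea of a single automated flow can be sketched as a chain of small functions, one per stage. In this minimal Python example, the source is a hypothetical semicolon-separated CSV export with decimal commas (as produced by spreadsheets saved under some European locale settings), rows with a missing volume are rejected during validation, and the clean rows are loaded into a JSON file in a temporary folder. A production pipeline would add logging, scheduling and error handling.

```python
import csv
import io
import json
import tempfile
from pathlib import Path

# Stand-in for an exported spreadsheet (hypothetical data).
RAW_CSV = """element_id;category;volume_m3
W-01;Wall;12,5
W-02;Wall;
S-01;Slab;40
"""


def extract(text):
    """Extract: read semicolon-separated rows into dicts."""
    return list(csv.DictReader(io.StringIO(text), delimiter=";"))


def transform(rows):
    """Transform: replace decimal commas and cast volumes to float."""
    out = []
    for r in rows:
        vol = r["volume_m3"].replace(",", ".").strip()
        out.append({**r, "volume_m3": float(vol) if vol else None})
    return out


def validate(rows):
    """Validate: split rows into accepted and rejected (missing volume)."""
    accepted = [r for r in rows if r["volume_m3"] is not None]
    rejected = [r for r in rows if r["volume_m3"] is None]
    return accepted, rejected


def load(rows, target):
    """Load: write the clean rows to a JSON file."""
    target.write_text(json.dumps(rows, indent=2), encoding="utf-8")
    return target


def run_pipeline(text, out_dir):
    accepted, rejected = validate(transform(extract(text)))
    path = load(accepted, Path(out_dir) / "elements.json")
    return path, rejected


with tempfile.TemporaryDirectory() as tmp:
    path, rejected = run_pipeline(RAW_CSV, tmp)
    loaded = json.loads(path.read_text(encoding="utf-8"))

print(loaded)
print("rejected:", rejected)
```

Keeping each stage as a separate function makes it easy to test stages in isolation and to swap the source or target without touching the rest of the flow.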

Key Actions:

- Create and configure ETL pipelines to automate processes:

- Extract: set up automatic data collection from PDF documents (Fig. 4.1-2, Fig. 4.1-5, Fig. 4.1-7), Excel spreadsheets, CAD models (Fig. 7.2-4), ERP systems and other sources you work with

- Transform: set up automatic processes that convert data into a single structured format, and automate calculations so that they run outside closed applications (Fig. 7.2-8)

- Load: set up automatic loading of data into summary tables, documents or centralized repositories (Fig. 7.2-9, Fig. 7.2-13, Fig. 7.2-16)

- Automate the calculation and QTO (Quantity Take-Off) processes as we discussed in the chapter “QTO Quantity Take-Off: Grouping Project Data by Attributes”:

- Configure automatic extraction of volumes from CAD models using APIs, plug-ins or reverse engineering tools (Fig. 5.2-5).

- Define rules, in the form of tables, for grouping elements of different classes by attributes (Fig. 5.2-12)

- Try to automate frequently repeated volume and cost calculations outside closed modular systems (Fig. 5.2-15)

- Start using Python and Pandas to process data, as we discussed in the chapter “Python Pandas: an indispensable tool for working with data”:

- Use DataFrames to work with XLSX files and automate the processing of tabular data (Fig. 3.4-6)

- Automate information aggregation and transformation with various Python libraries

- Use LLMs to help write individual code blocks and entire pipelines (Fig. 7.2-18)

- Try building a pipeline in Python that detects errors or anomalies and sends a notification to the responsible person (e.g., the project manager) (Fig. 7.4-2)
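The QTO and notification steps listed above can be combined into a small check. This Python sketch groups elements by category and material, sums their volumes, multiplies the totals by unit prices and collects anomalies (missing or non-positive volumes, groups without a price) into one message. The `UNIT_PRICES` table and the sample elements are hypothetical, and `send` is a placeholder you would replace with an e-mail or messenger integration.

```python
from collections import defaultdict

# Hypothetical unit prices per (category, material), e.g. per m3.
UNIT_PRICES = {("Wall", "Concrete"): 120.0, ("Slab", "Concrete"): 110.0}


def quantity_take_off(elements):
    """Group elements by category and material and sum their volumes."""
    totals = defaultdict(float)
    anomalies = []
    for el in elements:
        vol = el.get("volume_m3")
        if vol is None or vol <= 0:
            anomalies.append(f"{el['id']}: missing or non-positive volume")
            continue
        totals[(el["category"], el["material"])] += vol
    return dict(totals), anomalies


def estimate_costs(totals, prices):
    """Multiply grouped volumes by unit prices; flag groups without a price."""
    costs, anomalies = {}, []
    for key, vol in totals.items():
        if key in prices:
            costs[key] = round(vol * prices[key], 2)
        else:
            anomalies.append(f"{key}: no unit price defined")
    return costs, anomalies


def notify(messages, send=print):
    """Send all anomalies in one message; `send` is a placeholder channel."""
    if messages:
        send("QTO check found issues:\n" + "\n".join(messages))
    return len(messages)


elements = [
    {"id": "W-01", "category": "Wall", "material": "Concrete", "volume_m3": 12.5},
    {"id": "W-02", "category": "Wall", "material": "Concrete", "volume_m3": 7.5},
    {"id": "S-01", "category": "Slab", "material": "Concrete", "volume_m3": 40.0},
    {"id": "B-01", "category": "Beam", "material": "Steel", "volume_m3": 0.8},
    {"id": "W-03", "category": "Wall", "material": "Brick", "volume_m3": None},
]

totals, qto_issues = quantity_take_off(elements)
costs, cost_issues = estimate_costs(totals, UNIT_PRICES)
sent = []  # collects messages here instead of e-mailing them
count = notify(qto_issues + cost_issues, send=sent.append)
print(costs)
print(sent[0] if sent else "no issues")
```

With pandas, the grouping step corresponds to `df.groupby(["category", "material"])["volume_m3"].sum()`; the plain-Python version is shown so that the logic stays visible without extra dependencies.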

Automation based on DataOps principles allows you to move from manual and fragmented data handling to sustainable and repeatable processes. This not only reduces the burden on employees who deal with the same transformations every day, but also dramatically increases the reliability, scalability and transparency of the entire information system.

Step 4: Create an open data governance ecosystem.

Despite the development of closed modular systems and their integration with new tools, companies face a serious problem: the complexity of such systems grows faster than their usefulness. The original idea of a single proprietary platform covering all business processes has led to excessive centralization, in which any change requires significant time and resources to implement.

As we discussed in the chapter “Corporate Mycelium: How Data Connects Business Processes,” effective data management requires an open and unified ecosystem that connects all sources of information.

Key elements of the ecosystem:

- Select an appropriate data store:

- For tables and calculations use databases – for example, PostgreSQL or MySQL (Fig. 3.1-7).

- For documents and reports, cloud storage (Google Drive, OneDrive) or systems that support JSON format may be suitable

- Familiarize yourself with the capabilities of data warehouses, data lakes and other tools for centralized storage and analysis of large amounts of information (Fig. 8.1-8)

- Implement solutions to access proprietary data:

- If you use proprietary systems, set up API or SDK access to them to retrieve data for external processing (Fig. 4.1-2)

- Familiarize yourself with the potential of reverse engineering tools for CAD formats (Fig. 4.1-13)

- Set up ETL pipelines that periodically collect data from applications or servers, convert it into open structured formats and save it to repositories (Fig. 7.2-3)

- Discuss within the team how to provide access to data without the need for proprietary software

- Remember: data is more important than interfaces. It is the structure and availability of information, not specific user interface tools, that provide long-term value

- Think about creating a Center of Excellence (CoE) for data, as we discussed in the “Center of Excellence (CoE) for Data Modeling” chapter, or how you can provide data expertise in other ways (Fig. 4.3-9)
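As an illustration of an ETL pipeline that moves data from a proprietary system into an open store, the Python sketch below uses the standard-library `sqlite3` module as a stand-in for PostgreSQL or MySQL. `fetch_from_source` is a hypothetical placeholder for an API or SDK call; the upsert makes reruns update existing rows instead of duplicating them, and the table is exported to CSV so that it can be opened without proprietary software.

```python
import csv
import io
import sqlite3


def fetch_from_source():
    """Placeholder for an API/SDK call to a proprietary system (hypothetical data)."""
    return [
        {"element_id": "W-01", "category": "Wall", "volume_m3": 12.5},
        {"element_id": "S-01", "category": "Slab", "volume_m3": 40.0},
    ]


def init_store(conn):
    """Create the target table if it does not exist yet."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS elements (
               element_id TEXT PRIMARY KEY,
               category   TEXT NOT NULL,
               volume_m3  REAL
           )"""
    )


def upsert(conn, records):
    """Insert new rows or update existing ones, so reruns are idempotent."""
    conn.executemany(
        """INSERT INTO elements (element_id, category, volume_m3)
           VALUES (:element_id, :category, :volume_m3)
           ON CONFLICT(element_id) DO UPDATE SET
               category = excluded.category,
               volume_m3 = excluded.volume_m3""",
        records,
    )
    conn.commit()


def export_csv(conn):
    """Export the table to CSV, an open format any tool can read."""
    cur = conn.execute(
        "SELECT element_id, category, volume_m3 FROM elements ORDER BY element_id"
    )
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow([d[0] for d in cur.description])
    writer.writerows(cur.fetchall())
    return buf.getvalue()


def run_once(conn):
    init_store(conn)
    upsert(conn, fetch_from_source())
    return export_csv(conn)


conn = sqlite3.connect(":memory:")
csv_text = run_once(conn)
csv_text = run_once(conn)  # a rerun updates rows instead of duplicating them
print(csv_text)
```

The `INSERT ... ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later, and PostgreSQL supports the same construct. To run the pipeline periodically, `run_once` could be triggered by a scheduler such as cron or Windows Task Scheduler.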

The data management ecosystem creates a unified information space in which all project participants work with consistent, up-to-date and verified information. It is the basis for scalable, flexible and reliable digital processes.