Today’s design data architecture is undergoing fundamental changes. The industry is moving away from bulky, isolated models and closed formats towards more flexible, machine-readable structures focused on analytics, integration and process automation. However, the transition to new formats alone does not guarantee efficiency – the quality of the data itself is inevitably at the center of attention.

In the pages of this book, we talk a lot about formats, systems, and processes. But all these efforts are meaningless without one key element: data that can be trusted. Data quality is a cornerstone of digitalization that we will return to throughout the parts that follow.

Modern construction companies, especially large ones, use dozens and sometimes thousands of different systems and databases (Fig. 4.2-1). These systems must not only be filled with new information on a regular basis but also interact effectively with one another. Any new data produced by processing incoming information is fed back into these environments and used to solve specific business tasks.

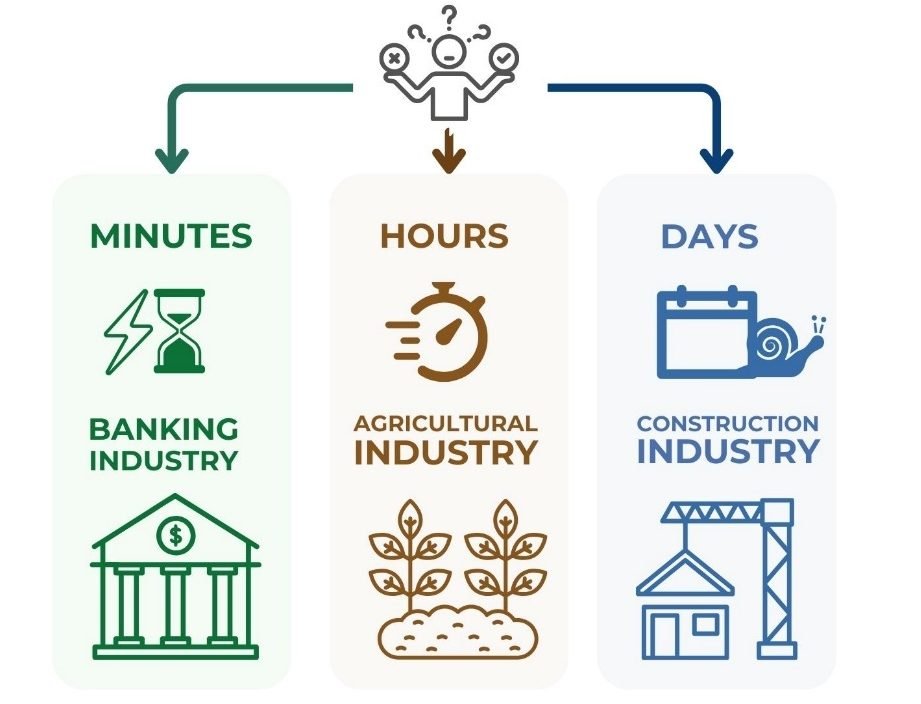

In the past, decisions on specific business tasks were made by top managers, the so-called HiPPOs (Fig. 2.1-9), on the basis of experience and intuition. With the sharp increase in the volume of information, this approach is becoming increasingly questionable, and automated analytics working with real-time data is replacing it.

Traditional, manual discussions of business processes at the executive level will give way to operational analytics, which must answer business queries quickly.

The era when accountants, foremen and estimators spent days or weeks manually compiling reports, summary tables and project data marts is a thing of the past. Today, the speed and timeliness of decision-making are becoming a key source of competitive advantage.

The main difference between the construction industry and more digitally developed industries (Fig. 4.2-1) is the low level of data quality and standardization. Outdated approaches to generating, transmitting and processing information slow down processes and create chaos. The lack of uniform data quality standards hinders the implementation of end-to-end automation.

The poor quality of input data remains one of the main challenges, compounded by the lack of formalized processes for preparing and verifying it. Without reliable and consistent data, effective integration between systems is impossible. This leads to delays, errors and increased costs at every stage of the project lifecycle.

The following sections of the book describe in detail how to improve data quality, standardize processes, and shorten the path from raw information to high-quality, validated and consistent data.