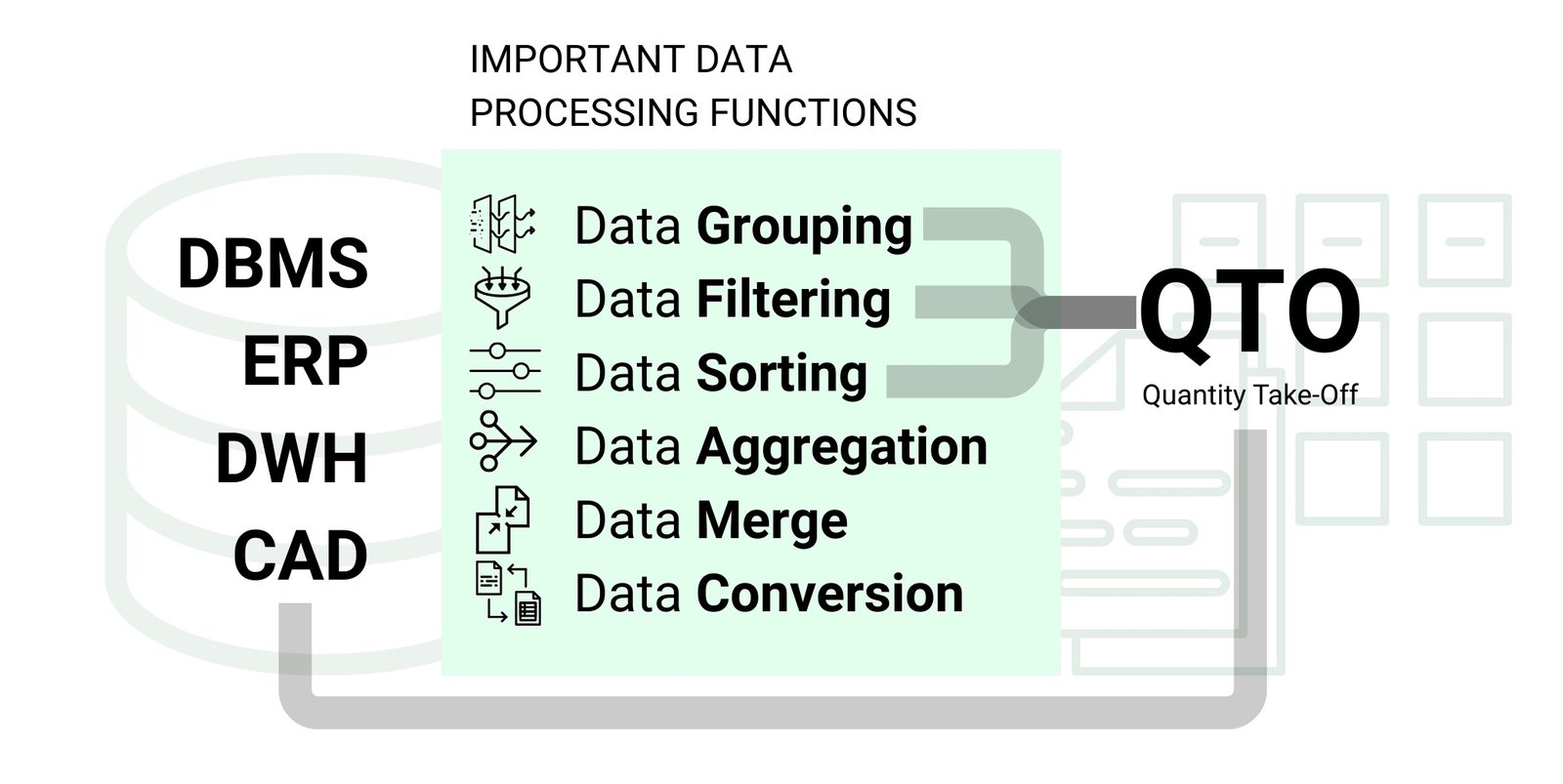

QTO (Quantity Take-Off) in construction is the process of extracting the quantitative characteristics of the elements required to realize a project. In practice, QTO is often a semi-manual process involving data collection from various sources: PDF documents, DWG drawings and CAD models.

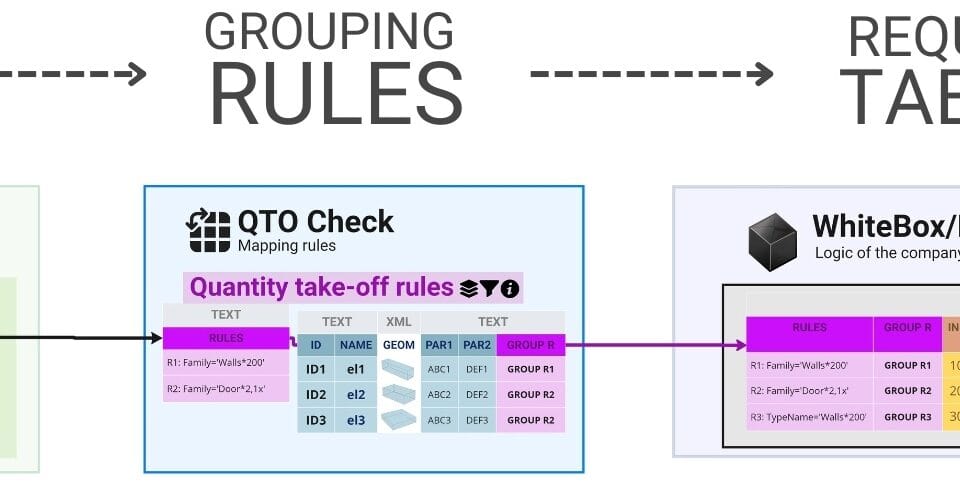

When working with data extracted from CAD databases, the QTO process is realized as a sequence of filtering, sorting, grouping and aggregation operations. Model elements are selected by class, category and type parameters, and then their quantitative attributes – such as volume, area, length or quantity – are summarized according to the calculation logic (Fig. 5.2-2).

The QTO process (filtering and grouping) makes it possible to systematize data, produce specifications and prepare source information for estimates, purchasing and work schedules. The basis of QTO is the classification of elements according to the type of attribute being measured. For each element or group of elements, the corresponding quantitative measurement parameter is selected. For example:

- Length attribute (curbstone – in meters)

- Area attribute (drywall work – in square meters)

- Volume attribute (concrete works – in cubic meters)

- Quantity attribute (windows – per piece)
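The pairing of element groups with their measured attributes can be sketched as a small lookup table. This is a minimal illustration only: the category names, attribute keys and sample values below are assumptions, not fields from any particular CAD schema.

```python
# Hypothetical mapping: element category -> (measured attribute, unit).
MEASUREMENT_RULES = {
    "curbstone": ("length", "m"),
    "drywall": ("area", "m2"),
    "concrete": ("volume", "m3"),
    "window": ("count", "pcs"),
}


def measured_quantity(element: dict) -> tuple[float, str]:
    """Return the quantity and unit that a QTO would use for one element."""
    attribute, unit = MEASUREMENT_RULES[element["category"]]
    # Countable items contribute one piece each; the others carry a geometric value.
    value = 1.0 if attribute == "count" else float(element[attribute])
    return value, unit


# Illustrative elements with made-up values.
elements = [
    {"category": "curbstone", "length": 12.5},
    {"category": "drywall", "area": 48.0},
    {"category": "concrete", "volume": 3.2},
    {"category": "window"},
]

quantities = [measured_quantity(e) for e in elements]
```

Keeping the rule table separate from the elements means a new category can be supported by adding one entry rather than changing the calculation logic.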

In addition to the quantities derived mathematically from the geometry, overrun factors are often applied after the QTO grouping step (Fig. 5.2-12). These are correction values that account for losses and for installation, storage or transportation conditions; a factor of 1.1, for example, adds 10% for logistics and installation. This makes it possible to predict the actual consumption of materials more accurately and to avoid both shortages and overstocking on the construction site.
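As a rough sketch of how such a correction might be applied, the snippet below multiplies grouped net quantities by a factor per group. The group names, volumes and the 1.05 factor are illustrative assumptions; only the 1.1 value (10%) comes from the text.

```python
# Assumed net volumes per group, in cubic meters (illustrative numbers).
net_volumes = {"concrete walls": 100.0, "masonry walls": 40.0}

# Assumed overrun factors; 1.1 adds 10% for logistics and installation.
overrun_factors = {"concrete walls": 1.1, "masonry walls": 1.05}


def apply_overrun(net: dict, factors: dict, default: float = 1.0) -> dict:
    """Multiply each grouped quantity by its overrun factor (default: no overrun)."""
    return {
        name: round(quantity * factors.get(name, default), 3)
        for name, quantity in net.items()
    }


order_volumes = apply_overrun(net_volumes, overrun_factors)
```

Groups without an entry in `overrun_factors` fall back to a factor of 1.0, so the net quantity is passed through unchanged.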

An automated quantity take-off (QTO) process is essential for producing accurate calculations and estimates, reducing human error when compiling volume specifications and preventing over- or under-ordering of materials.

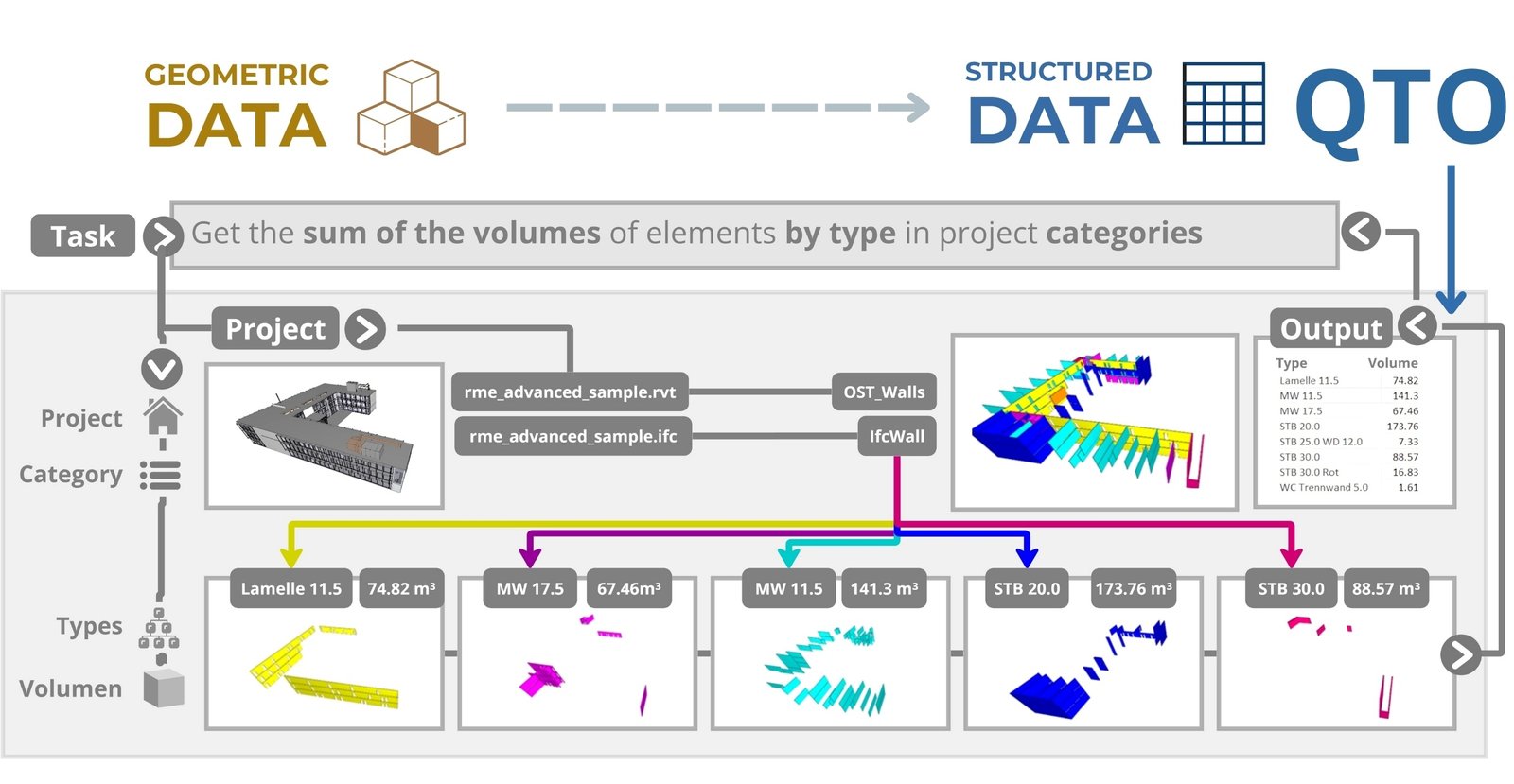

As an example of the QTO process, consider a common case: producing, from a CAD database, a specification table of volumes by element type for a given category or class of elements. We group all elements in the wall category of the CAD project by type and sum the volume attribute for each type, presenting the result as a QTO volume table (Fig. 5.2-3).

In the example of a typical CAD project (Fig. 5.2-3), all wall category elements within the CAD database are grouped by wall type, e.g. “Lamelle 11.5”, “MW 11.5” and “STB 20.0”, and have well-defined volume attributes expressed in cubic meters.

The goal of the manager, who sits at the interface between designers and calculation specialists, is to obtain an automated table of volumes by element type in the selected category, not only for a specific project but in a universal form applicable to other projects with a similar model structure. This makes the approach scalable and allows data to be reused without duplication of effort.
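One way to picture such a universal form is a small function that takes the category, grouping key and attribute as parameters instead of hard-coding them. The sketch below is an assumption-laden illustration: the record layout, the key names (`category`, `type`, `volume`) and the volumes are hypothetical, and only the wall type names are taken from the example.

```python
from collections import defaultdict


def qto_table(elements, category, group_key="type", attribute="volume"):
    """Filter elements by category, group them by a key, and sum one attribute."""
    totals = defaultdict(float)
    for element in elements:
        if element.get("category") != category:
            continue  # filtering step
        # Grouping and aggregation step: accumulate the attribute per group.
        totals[element[group_key]] += element.get(attribute, 0.0)
    # Sorting step: return rows ordered by group name.
    return sorted(totals.items())


# Illustrative records; the volumes are made up.
project = [
    {"category": "Walls", "type": "STB 20.0", "volume": 4.0},
    {"category": "Walls", "type": "MW 11.5", "volume": 1.5},
    {"category": "Walls", "type": "STB 20.0", "volume": 2.0},
    {"category": "Doors", "type": "D1", "volume": 0.1},
]

walls_qto = qto_table(project, category="Walls")
```

Because nothing in the function is specific to walls or to one project, the same call can be repeated for another category or another model that exposes the same keys.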

Gone are the days when experienced designers and estimators armed themselves with a ruler and carefully measured every line on paper or PDF plans, a tradition that had gone unchanged for millennia. With the development of 3D modeling, where the geometry of each element is directly linked to automatically calculated volumetric attributes, the process of determining volumes and QTO quantities has become automated.

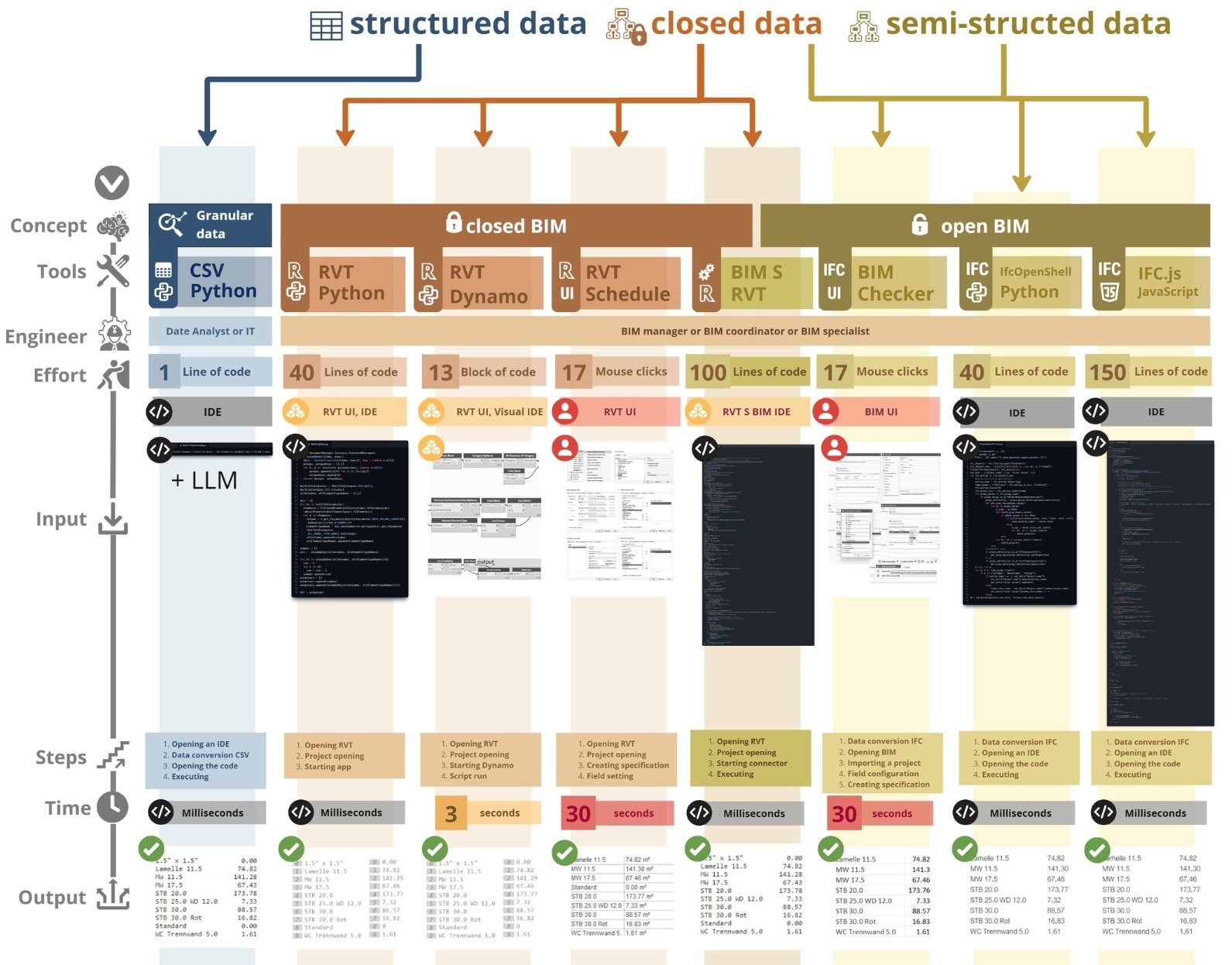

In our example, the task is to “select a category of walls in a project, group all elements by type, and present the volume attribute information in a structured, tabular format” so that this table can be used by dozens of other professionals for costing calculations, logistics, schedules, and other business cases (Fig. 6.1-3).

Due to the closed nature of CAD data, not every specialist today has direct access to the CAD database (the reasons for the access problem and its solutions are detailed in the sixth part of the book). Therefore, many are forced to turn to specialized BIM tools based on the concepts of open BIM and closed BIM (A. Boiko, “Lobbykriege um Daten im Bauwesen | Techno-Feudalismus und die Geschichte von BIMs,” 2024). When working with specialized BIM tools or directly in the CAD program environment, the table with QTO (Quantity Take-Off) results can be generated in different ways, depending on whether a manual interface or software automation is used.

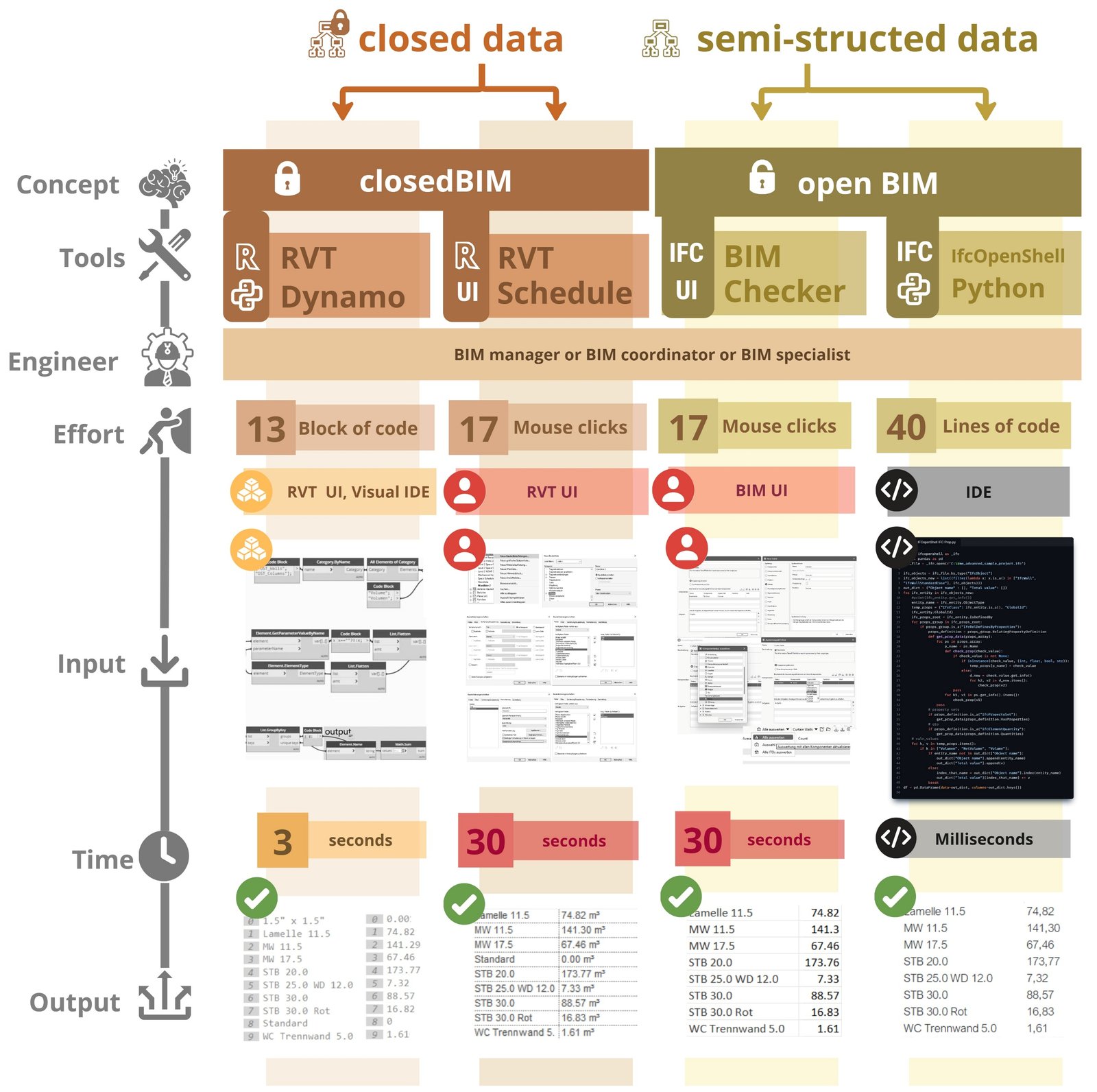

For example, using the user interface of CAD (BIM) software, it is enough to perform about 17 actions (button clicks) to get a ready table of volumes (Fig. 5.2-4). However, the user must have a good understanding of the model structure and functions of the CAD (BIM) software.

If automation is applied through program code or through plug-ins and API tools within CAD programs, the number of manual steps to obtain the volume tables is reduced, but 40 to 150 lines of code will need to be written, depending on the library or tool used:

- IfcOpSh (open BIM) or Dynamo IronPython (closed BIM) – allow you to get a QTO table from a CAD format or CAD program in just ~40 lines of code.

- IFC_js (open BIM) – requires approximately 150 lines of code to extract volume attributes from the IFC model.

- CAD (BIM) user interface tools – allow you to get the same result manually, in 17 mouse clicks.

The result is the same: a structured table with volume attributes for a group of elements. The only difference is the labor cost and the level of technical training required of the user (Fig. 5.2-4). Compared with manual collection of volumes, modern tools significantly speed up the QTO process and reduce the probability of errors. They allow data to be extracted directly from the project model, eliminating the need to manually recalculate volumes from drawings, as was done in the past.

Regardless of the method used, whether open BIM or closed BIM, it is possible to obtain an identical QTO table with project element volumes (Fig. 5.2-4). However, when working with project data in CAD (BIM) concepts, users depend on specialized tools and APIs provided by vendors (Fig. 3.2-13). This creates additional layers of dependency and requires learning unique data schemas while limiting direct access to the data.

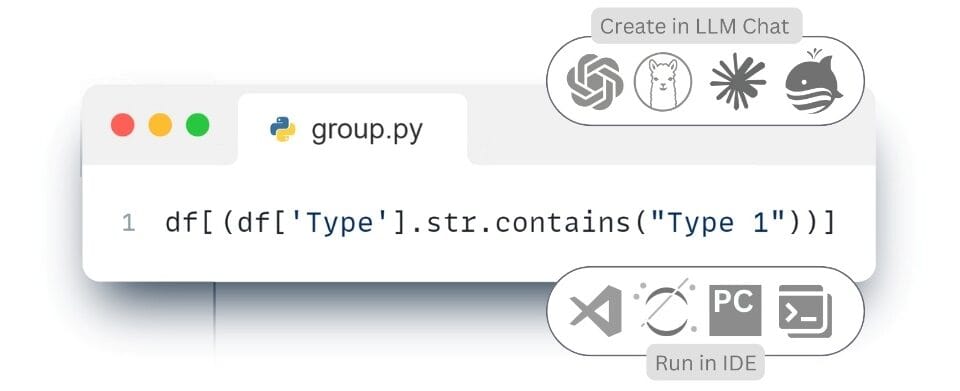

The closed nature of CAD data makes it difficult to obtain QTO tables and other parameters, which complicates the automation of calculations and integration with external systems. By using tools for direct database access and reverse engineering tools that transfer CAD project data into an open structured dataframe format (Fig. 4.1-13), an identical QTO table can be obtained with just one line of code (Fig. 5.2-5, variant with granular data).

When using open structured data from CAD projects, as mentioned in the chapter “Converting CAD (BIM) data into structured form”, the QTO grouping process is greatly simplified.
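A minimal sketch of what such a one-line QTO might look like with pandas is shown below. The dataframe is an illustrative stand-in for exported CAD data: the column names (`Category`, `Type`, `Volume`) and the volumes are assumptions, and only the wall type names come from the example above.

```python
import pandas as pd

# Illustrative stand-in for a CAD project exported to a flat table.
df = pd.DataFrame(
    {
        "Category": ["Walls", "Walls", "Walls", "Walls", "Doors"],
        "Type": ["Lamelle 11.5", "MW 11.5", "STB 20.0", "STB 20.0", "D1"],
        "Volume": [0.5, 1.5, 4.0, 2.0, 0.1],
    }
)

# The QTO table itself is a single filter-group-sum expression.
qto = df[df["Category"] == "Walls"].groupby("Type")["Volume"].sum()
```

Once the data is in this form, changing the category or the aggregated attribute only means changing a string in the expression, with no vendor-specific API involved.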

Approaches based on the use of open structured data or direct access to CAD model databases are free from the marketing constraints associated with the acronym BIM. They rely on proven tools long used in other industries (ETL process, Fig. 7.3-10).

According to the McKinsey Global Institute report “Open data: Unlocking innovation and performance with liquid information” (October 2013), the use of open data can create opportunities for savings of $30 to $50 billion per year in the design, engineering, procurement, and construction of electric power facilities. This translates into savings of 15 percent in construction capital costs.

Working with open structured (granular) data simplifies information retrieval and processing, reduces dependence on specialized BIM platforms, and opens the door to automation without the need to use proprietary systems or parametric and complex data models from CAD formats.