A modern solution for construction data integration without programming

Automation for Everyone: How n8n Is Revolutionizing Business Workflows

The construction industry faces a critical challenge: dozens of programs and systems operate in isolation from one another. Global data volume has grown from about 15 zettabytes in 2015 to an estimated 181 zettabytes in 2025, with 90% of all existing data created in just the last few years. Despite this explosive growth, most information remains locked in siloed systems.

According to Deloitte (2016), the average construction professional uses 3.3 software applications daily, but only 1.7 of them are integrated. By the mid-2020s, large construction companies may have thousands of different systems requiring seamless coordination. This lack of integration drives the urgent need for effective automation solutions in the construction industry.

Most workflows in the industry follow an endless cycle: Email → Excel → PDF → Excel → PDF → Email. Copying files, renaming them, and sending identical documents cost professionals hours every week.

n8n: Revolutionizing Construction Process Automation

n8n ("n-eight-n") is a free-to-self-host, source-available ("fair-code") tool from Berlin for automating processes such as file operations, notifications, emails, and even AI tasks. No coding is required: the interface uses drag-and-drop, similar to Dynamo or Grasshopper.

The platform runs on Windows, Mac, and Linux — both offline and online. Your first workflow can be set up in under 30 minutes.

Key advantages of n8n:

A major challenge in using new technologies is that data, while abundant, remains fragmented, unstructured, and often incompatible between different systems and programs. Tools exist, but data chaos keeps the industry trapped in manual processes. The solution is automation — accessible and understandable for everyone, not just programmers.

(Source: Data Driven Construction)

What Makes n8n Different:

Essential Features Everyone Should Know

1. Visual Workflow Editor

Unlike traditional automation tools that require scripting, n8n provides a visual canvas where you connect nodes representing different services and actions. Each node has inputs and outputs that clearly show data flow.
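To make the node-and-connection idea concrete, here is a minimal sketch of a workflow represented as data. It is modeled loosely on the JSON that n8n exports (a `nodes` list plus a `connections` map keyed by source node name); the node names and `type` strings are illustrative assumptions, not a verified export from a specific n8n version. The function walks the connections to list nodes in data-flow order. Real n8n workflows can contain loops, which this simplified sketch rejects.

```python
from collections import deque

# Illustrative workflow, loosely modeled on n8n's exported JSON.
# Node names and "type" strings are assumptions for this sketch.
workflow = {
    "nodes": [
        {"name": "Manual Trigger", "type": "n8n-nodes-base.manualTrigger"},
        {"name": "Read Files", "type": "n8n-nodes-base.readWriteFile"},
        {"name": "Convert to Excel", "type": "n8n-nodes-base.convertToFile"},
    ],
    "connections": {
        "Manual Trigger": {"main": [[{"node": "Read Files", "type": "main", "index": 0}]]},
        "Read Files": {"main": [[{"node": "Convert to Excel", "type": "main", "index": 0}]]},
    },
}


def data_flow_order(wf):
    """Return node names so that every node comes after the nodes feeding it."""
    names = [node["name"] for node in wf["nodes"]]
    incoming = {name: 0 for name in names}
    edges = {name: [] for name in names}
    for source, outputs in wf["connections"].items():
        for output in outputs.get("main", []):
            for target in output or []:
                edges[source].append(target["node"])
                incoming[target["node"]] += 1
    # Kahn's algorithm: start from nodes without inputs (the triggers)
    queue = deque(name for name in names if incoming[name] == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for nxt in edges[node]:
            incoming[nxt] -= 1
            if incoming[nxt] == 0:
                queue.append(nxt)
    if len(order) != len(names):
        raise ValueError("workflow contains a loop; not handled in this sketch")
    return order


print(data_flow_order(workflow))
```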

2. Error Handling and Debugging

n8n excels at error management:

- Error workflows: Create separate workflows that trigger when main workflows fail

- Retry logic: Automatically retry failed executions with customizable intervals

- Debugging: Run individual nodes and inspect each node's output to identify issues

- Execution history: Review past runs with full data snapshots
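The retry item above can be pictured with a small, generic sketch. In n8n, retrying is a per-node setting (a maximum number of tries and a wait between tries); the Python function below only illustrates the same idea outside n8n, and `run_with_retry` and `flaky_upload` are hypothetical names.

```python
import time


def run_with_retry(task, max_tries=3, wait_seconds=1.0):
    """Call task() until it succeeds or max_tries attempts have failed.

    Same idea as a "retry on fail" setting: a fixed number of attempts
    with a fixed pause between them. Hypothetical helper for illustration.
    """
    last_error = None
    for attempt in range(1, max_tries + 1):
        try:
            return task()
        except Exception as error:  # in real code, catch narrower errors
            last_error = error
            if attempt < max_tries:
                time.sleep(wait_seconds)
    raise last_error


# Usage: a task that fails twice (e.g. a server is briefly unreachable)
calls = {"count": 0}


def flaky_upload():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("server not reachable")
    return "uploaded"


result = run_with_retry(flaky_upload, max_tries=3, wait_seconds=0.1)
print(result)
```

If every attempt fails, the last error is re-raised, which is the point where an error workflow would take over.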

3. Advanced Features for Power Users

- Webhooks: Create custom endpoints to trigger workflows from external systems

- Scheduled triggers: Run workflows on cron schedules or at specific intervals

- Sub-workflows: Build modular, reusable workflow components

- Variables and expressions: Use JavaScript expressions for complex data transformations

- Split and merge operations: Split data into separate branches or batches and combine the results (for example, with a Merge node)
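As an example of the webhook feature listed above, an external system (a script, a CAD add-in, another server) can start a workflow with an HTTP request. The sketch below assumes a Webhook node configured for POST on a hypothetical path `new-model` on a local instance. n8n serves production webhooks under `/webhook/<path>` and test webhooks under `/webhook-test/<path>`, but copy the exact URL from your own Webhook node.

```python
import json
import urllib.request

# Hypothetical URL: copy the real one from your Webhook node
WEBHOOK_URL = "http://localhost:5678/webhook/new-model"


def build_webhook_request(url, payload):
    """Prepare a JSON POST request for a Webhook node."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def trigger_workflow(url, payload, timeout=10):
    """Send the request and return the response body as text."""
    request = build_webhook_request(url, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Example call (needs a running n8n instance with an active webhook):
# trigger_workflow(WEBHOOK_URL, {"project": "House.rvt", "status": "uploaded"})
```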

4. Security and Compliance

Critical for construction companies handling sensitive project data:

- Encryption: All credentials encrypted at rest

- Role-based access: Control who can view/edit workflows

- Audit logs: Track all changes and executions

- On-premise deployment: Keep all data within your infrastructure

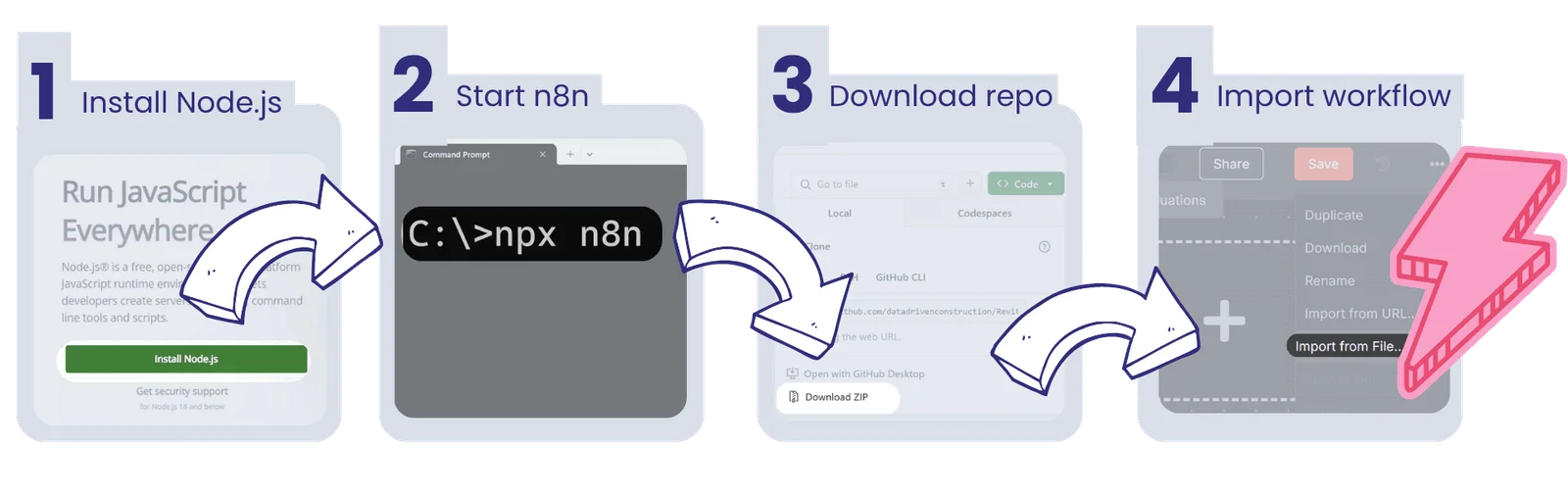

Quick Start: Install n8n in 3 Steps

1. Install Node.js from the official site (takes about 2 minutes); use a version supported by n8n

2. Install and start n8n with a single command in CMD or PowerShell: npx n8n (or install it globally with npm install n8n -g and then run n8n)

3. Open n8n in your browser at http://localhost:5678 and start building

Take ready-made pipelines and launch them

Video Tutorial: n8n Quick Start: Easy Installation & Pipeline Creation

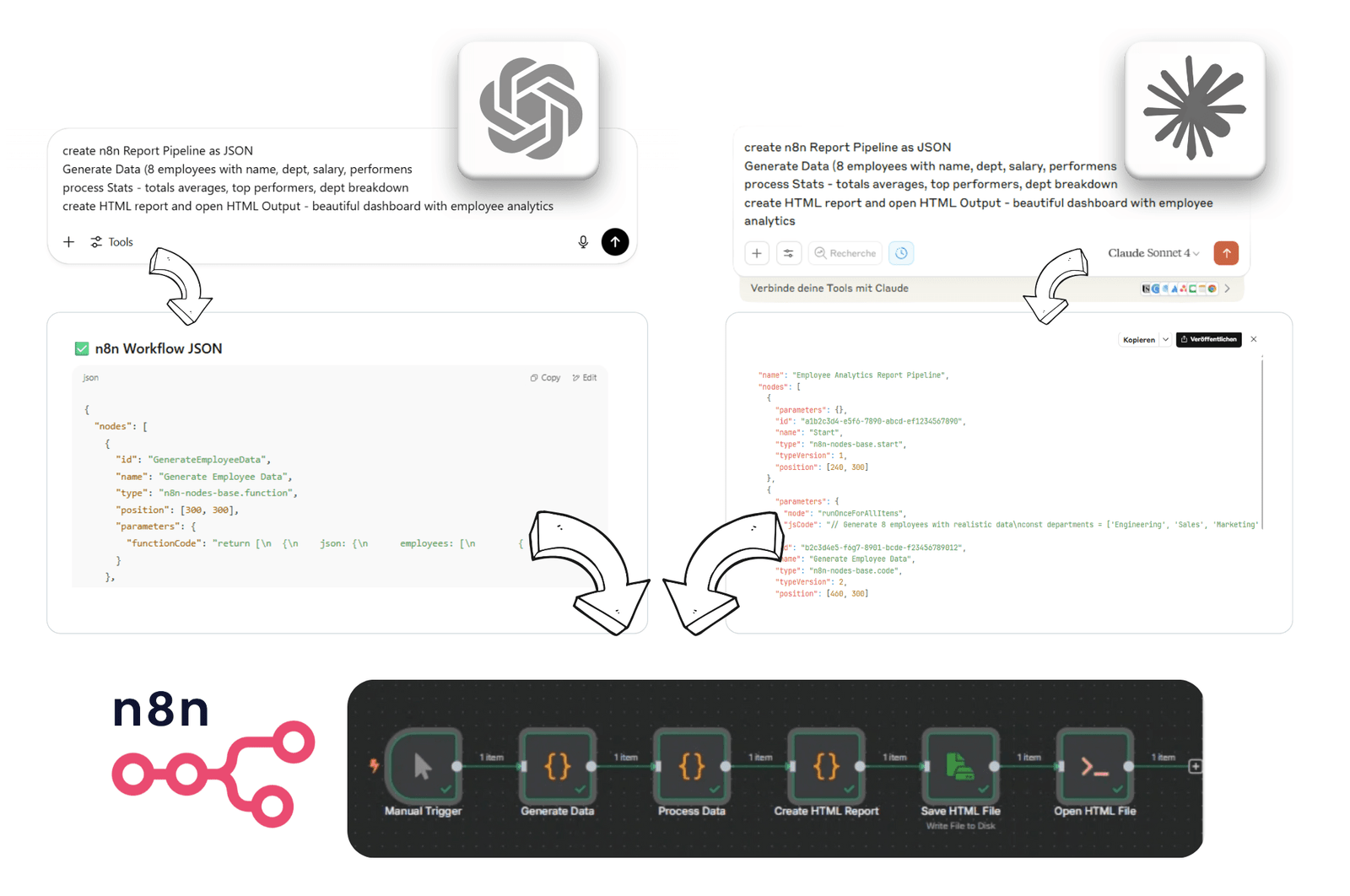
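After step 3 you can also check from a script that the local instance is up, which is handy before triggering workflows automatically. This sketch assumes n8n's default port 5678 and its `/healthz` health endpoint, which answers with HTTP 200 when the instance is running; adjust the base URL if you changed the host or port.

```python
import urllib.error
import urllib.request


def n8n_is_running(base_url="http://localhost:5678", timeout=3):
    """Return True if the n8n health endpoint answers with HTTP 200."""
    url = base_url.rstrip("/") + "/healthz"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or HTTP error: treat as "not running"
        return False
```

Calling `n8n_is_running()` without arguments checks the default local address.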

AI Integration: Next-Level Automation

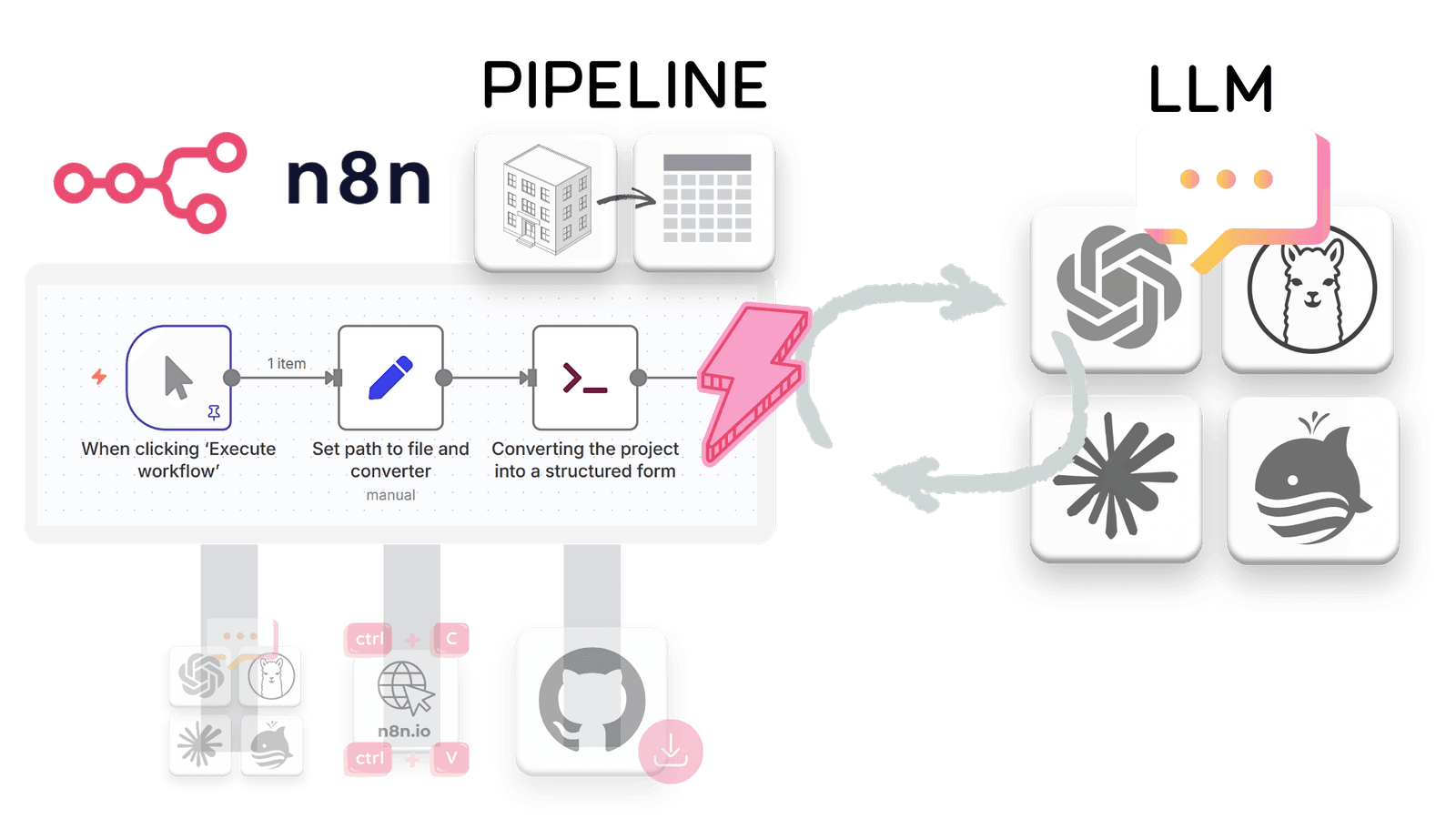

The game-changer is direct integration with AI tools like Claude and ChatGPT. For text generation, data analysis, or report creation, simply add the appropriate node and configure your prompt.

Example AI prompt:

"Create a JSON pipeline for n8n with the following logic: 1. Read files from a folder 2. Extract data from PDFs 3. Save to Excel with formatting 4. Send report via email with attachments"

Modern language models (Claude 4, GPT-4.1, Grok 4) can generate working n8n pipelines in minutes. Simply describe the required process logic, then review and test the generated pipeline before relying on it.
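Because a generated pipeline can contain malformed JSON or connections to nodes that do not exist, a quick structural check before importing is worthwhile. The sketch below assumes the export layout with a `nodes` list and a `connections` object keyed by node name; it checks structure only, not whether each node's parameters are valid for your n8n version.

```python
import json


def check_workflow_json(text):
    """Return a list of structural problems found in a workflow JSON string."""
    try:
        wf = json.loads(text)
    except json.JSONDecodeError as error:
        return [f"invalid JSON: {error}"]
    if not isinstance(wf, dict):
        return ["top level must be a JSON object"]

    nodes = wf.get("nodes")
    if not isinstance(nodes, list) or not nodes:
        return ["missing or empty 'nodes' list"]

    problems = []
    names = [node.get("name") for node in nodes]
    for name in set(names):
        if names.count(name) > 1:
            problems.append(f"duplicate node name: {name}")
    for node in nodes:
        if "type" not in node:
            problems.append(f"node without type: {node.get('name')}")

    known = set(names)
    for source, outputs in wf.get("connections", {}).items():
        if source not in known:
            problems.append(f"connection from unknown node: {source}")
        for output in outputs.get("main", []):
            for target in output or []:  # unused outputs may be empty or null
                if target.get("node") not in known:
                    problems.append(f"connection to unknown node: {target.get('node')}")
    return problems


generated = '{"nodes": [{"name": "Start", "type": "n8n-nodes-base.manualTrigger"}], "connections": {}}'
print(check_workflow_json(generated))  # → []
```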

AI Nodes Available in n8n:

OpenAI: ChatGPT, DALL-E, Whisper integration

Anthropic: Claude models for advanced reasoning

Google AI: Gemini and PaLM APIs

Hugging Face: Open-source models

Local LLMs: Connect to self-hosted models via Ollama

⚡ DDC Pipeline Hub

Templates can be imported as JSON, adapted to specific needs, and run immediately. Automation becomes a tool for all professionals — from project managers to design engineers.

Data from CAD, BIM, CAFM, ERP, Excel, and cloud services start "speaking the same language" — routine processes disappear, human error is minimized.

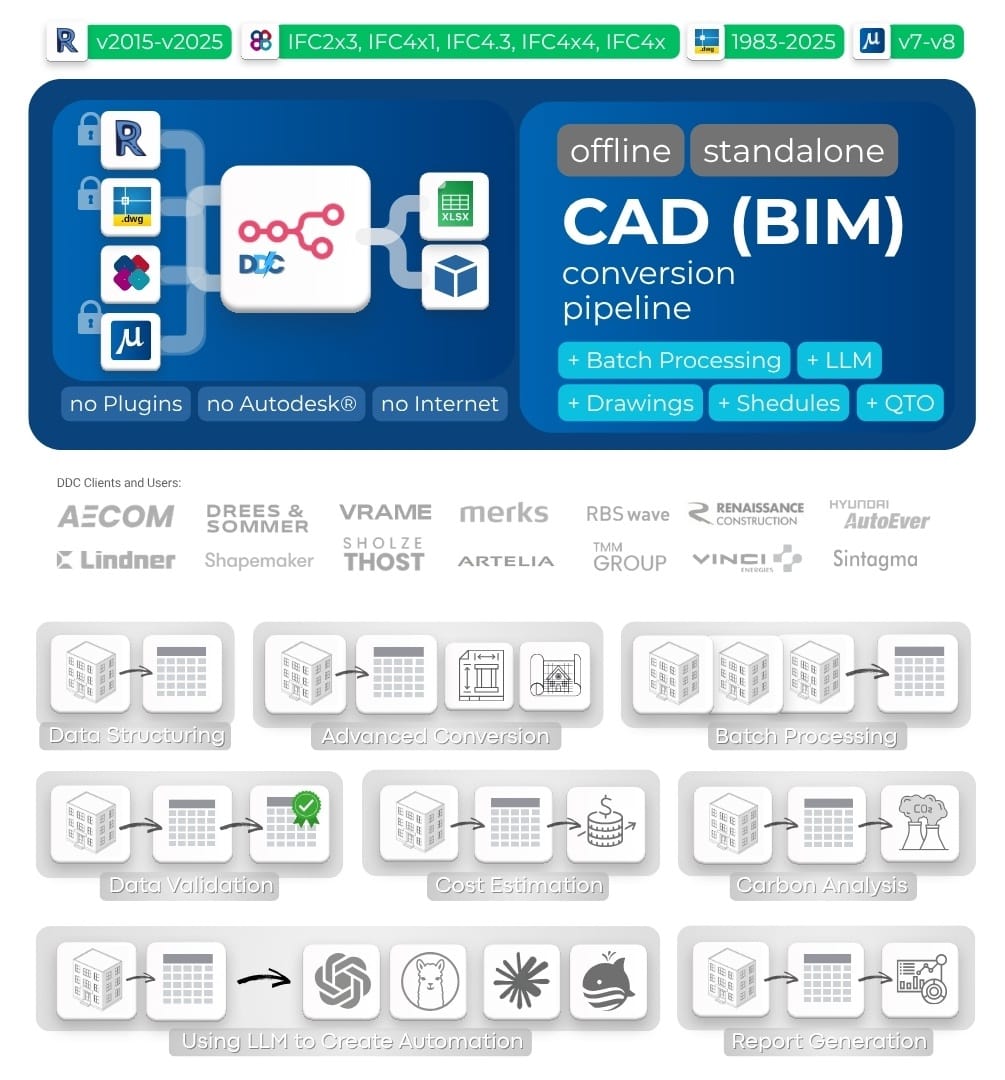

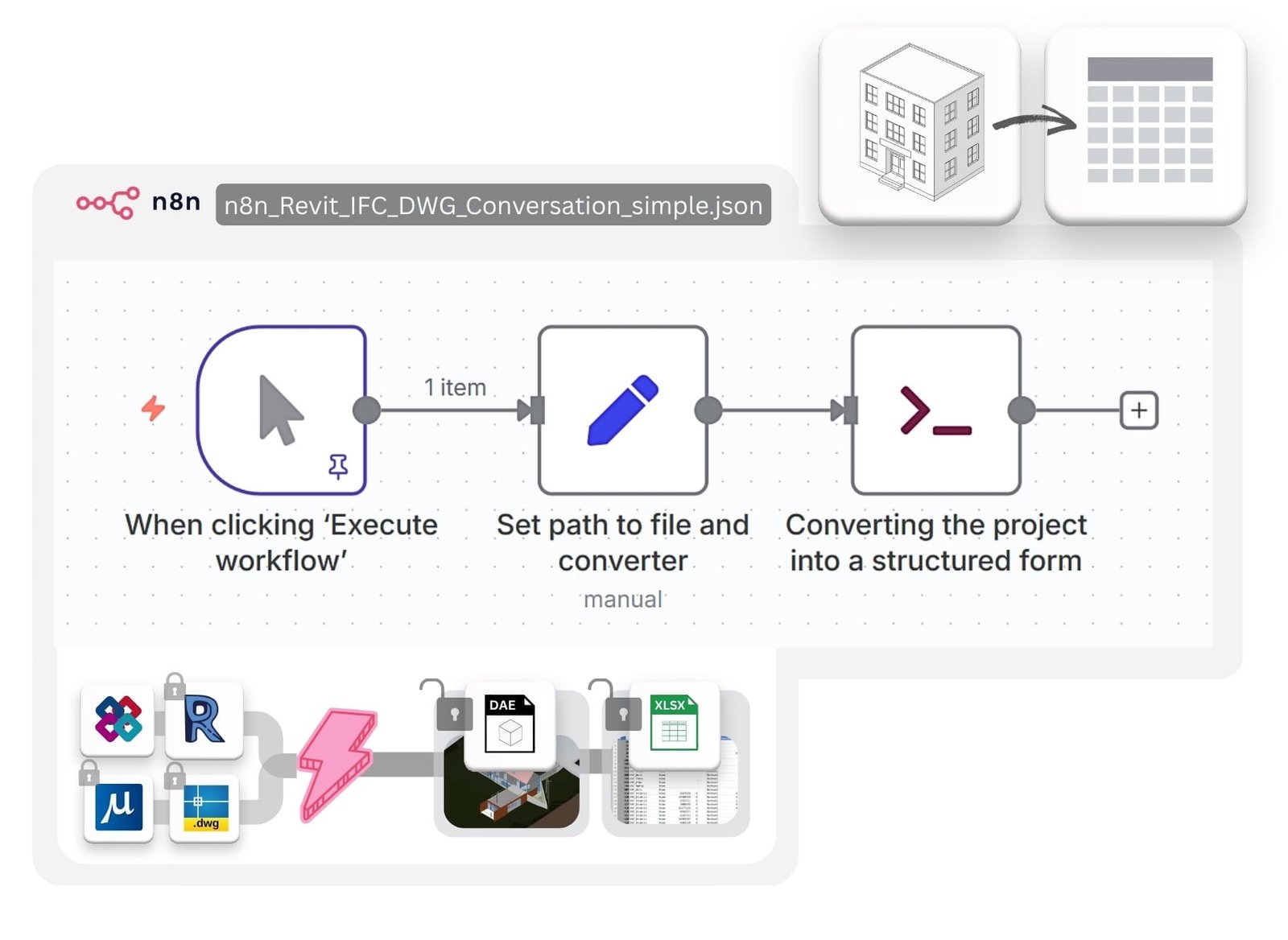

Practical Examples: CAD/BIM Process Automation

Let's explore ready-to-use solutions for automating CAD/BIM data workflows. All pipelines are available for download and can be deployed with minimal configuration.

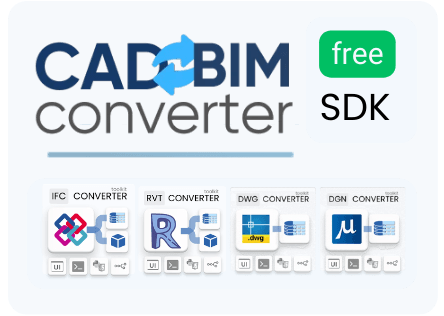

1. Data Conversion: Revit/IFC/DWG/DGN → Database + Geometry

This workflow converts .rvt, .ifc, .dwg, or .dgn files into an element database (.xlsx/.csv) and exports 3D geometry (.dae). Works locally without internet or Autodesk licenses.

To use: Import the JSON file, set converter executable paths and project file path, then click "Execute".
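Conceptually, a node of this kind launches a converter executable on the project file and then picks up the table it writes. The sketch below is a hypothetical Python equivalent: the converter path is a placeholder, it assumes the converter takes the project file as its only argument, and it assumes the output name follows the `<name>_rvt.xlsx` pattern used in the code examples later in this article (the `_ifc`, `_dwg`, and `_dgn` variants are a guess by analogy). Check your converter's documentation for its actual arguments and output names.

```python
import subprocess
from pathlib import Path

# Placeholder: set this to the converter executable you downloaded
CONVERTER_EXE = Path(r"C:\DDC\converter.exe")  # hypothetical path


def expected_output(project_file):
    """Guess the converter's table output, e.g. House.rvt -> House_rvt.xlsx."""
    project = Path(project_file)
    fmt = project.suffix.lstrip(".").lower()
    return project.with_name(f"{project.stem}_{fmt}.xlsx")


def convert(project_file, converter=CONVERTER_EXE):
    """Run the converter on one project file and return the output path."""
    # Assumption: the converter accepts the project file as its only argument
    subprocess.run([str(converter), str(project_file)], check=True)
    output = expected_output(project_file)
    if not output.exists():
        raise FileNotFoundError(f"converter finished but {output} was not found")
    return output
```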

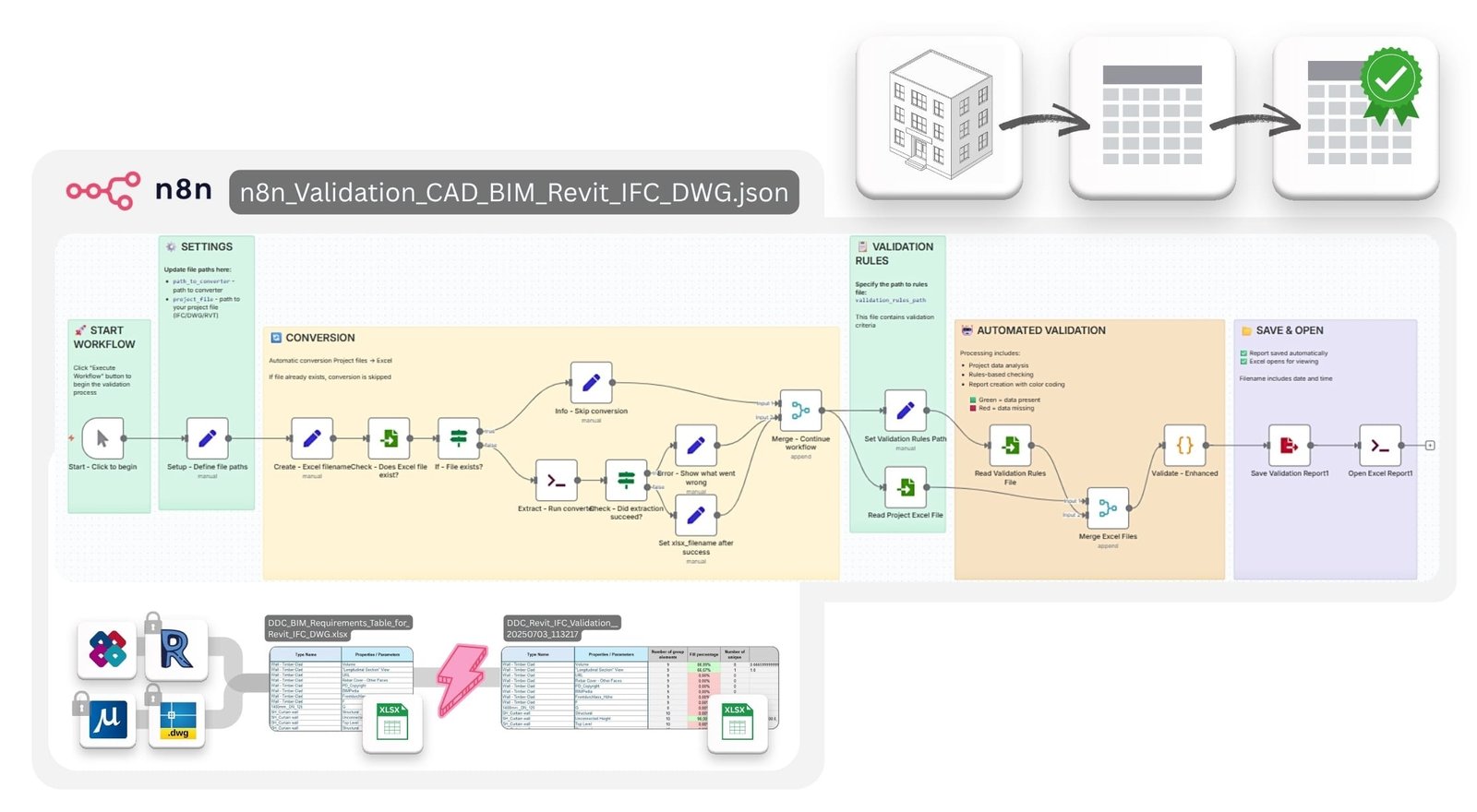

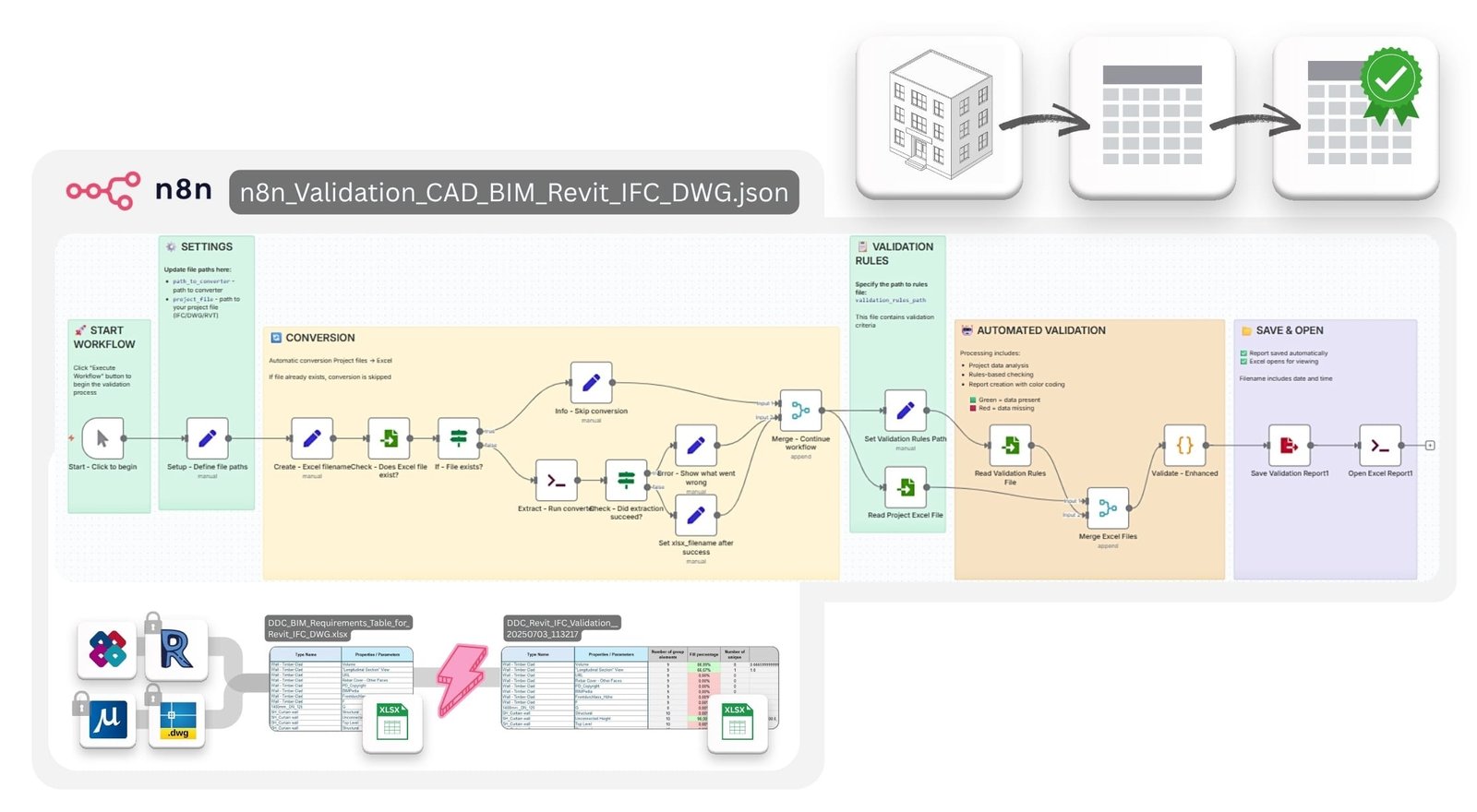

2. CAD/BIM Data Quality Validation

The pipeline reads CAD/BIM data and checks for missing or incorrect parameters by group, type, or other criteria. It creates a validation report showing completion percentages and unique parameter values. The checks can be aligned with industry requirements such as EIR, BEP, and IDS.

To use: Import the JSON file, set converter executable paths and project file path, then click "Execute".
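The core of such a check, the share of filled-in values per parameter and group, can be sketched with pandas. This is a simplified illustration, not the pipeline's own logic: the column names (`Category`, `FireRating`, `Material`) and the list of required parameters are hypothetical, and a real check would take them from the EIR, BEP, or IDS requirements.

```python
import pandas as pd


def completion_report(df, group_col, required_params):
    """Percentage of filled-in values per required parameter and group."""
    # A value counts as filled if it is not missing and not an empty string
    filled = df[required_params].apply(
        lambda col: col.notna() & col.astype(str).str.strip().ne("")
    )
    return filled.groupby(df[group_col]).mean() * 100


elements = pd.DataFrame({
    "Category": ["Walls", "Walls", "Doors", "Doors"],
    "FireRating": ["EI60", None, "EI30", "EI30"],
    "Material": ["Concrete", "Brick", "", "Wood"],
})
required = ["FireRating", "Material"]

report = completion_report(elements, "Category", required)
# Number of distinct values per parameter and group (missing values ignored)
unique_values = elements.groupby("Category")[required].nunique()
print(report)
print(unique_values)
```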

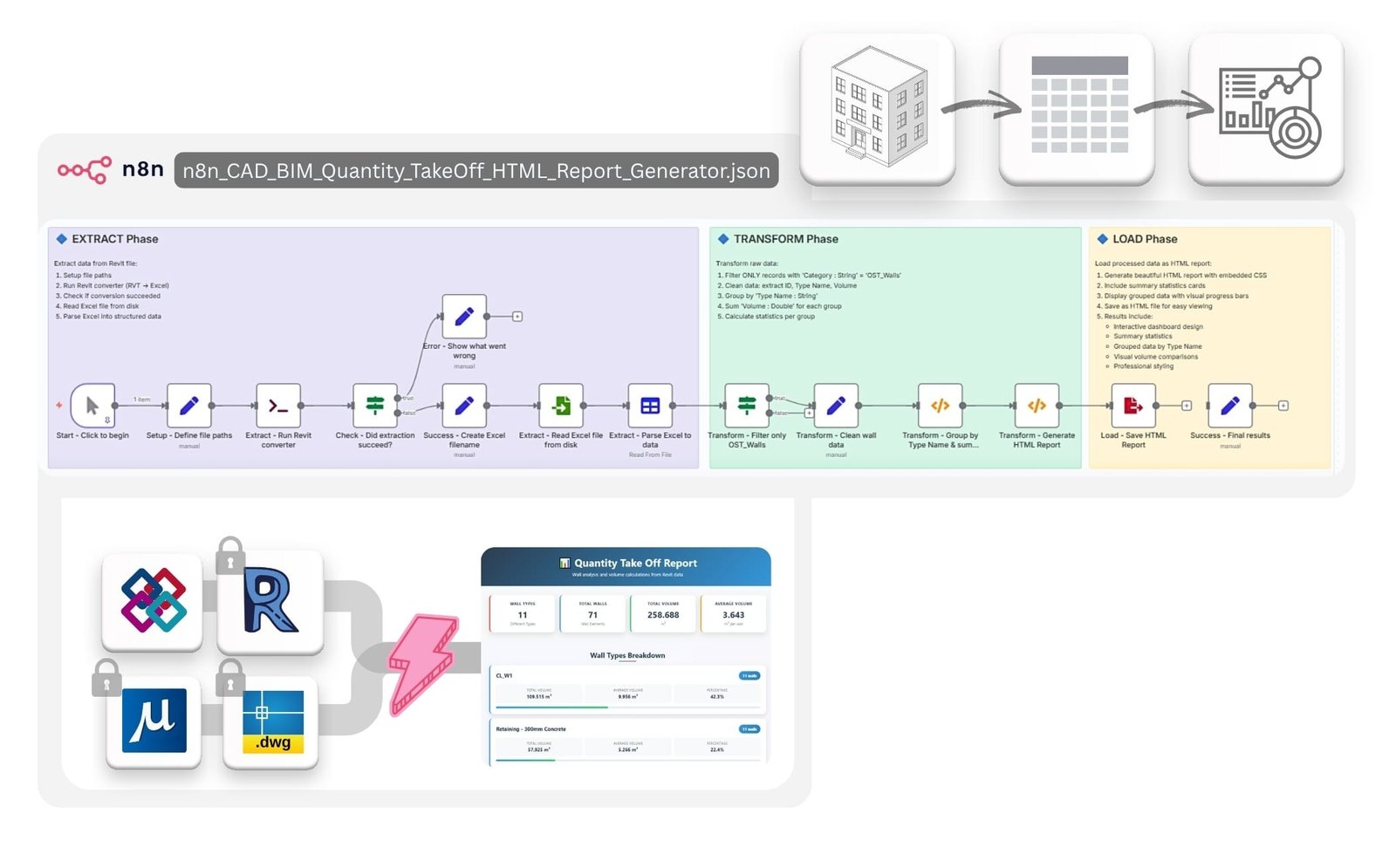

3. Quantity Takeoff & Report Generation

Automated calculation of volumetric parameters for selected element groups with HTML report generation. Perfect for quick grouping, calculations, and estimates.

To use: Import the JSON file, set converter executable paths and project file path, then click "Execute".
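A stripped-down version of the quantity takeoff step groups elements, sums their quantities, and writes the table as HTML. The column names (`Type`, `Volume`, `Area`) and the output file name are assumptions for illustration; the real pipeline's grouping parameters and report layout may differ.

```python
from html import escape

import pandas as pd


def quantity_takeoff(df, group_col, quantity_cols):
    """Sum quantities per group and add the number of elements per group."""
    summary = df.groupby(group_col)[quantity_cols].sum()
    summary["Count"] = df.groupby(group_col).size()
    return summary.round(2)


def write_html_report(summary, path, title="Quantity takeoff"):
    """Save the summary table as a simple standalone HTML page."""
    html_doc = f"<html><body><h1>{escape(title)}</h1>{summary.to_html()}</body></html>"
    with open(path, "w", encoding="utf-8") as f:
        f.write(html_doc)
    return html_doc


elements = pd.DataFrame({
    "Type": ["Wall 200mm", "Wall 200mm", "Slab 250mm"],
    "Volume": [10.0, 2.5, 30.0],   # m3
    "Area": [50.0, 12.5, 120.0],   # m2
})
qto = quantity_takeoff(elements, "Type", ["Volume", "Area"])
page = write_html_report(qto, "qto_report.html")
print(qto)
```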

Auto Classification and Auto Mapping of Elements

Automate the process of classifying building elements (from Revit, IFC, DWG, DGN, etc.) into standardized categories and automatically map their attributes for further calculations (e.g., QTO, cost, carbon footprint).
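Reduced to its simplest form, auto-classification assigns each element a class from its attributes, and auto-mapping renames source attributes to the names later calculations expect. Production pipelines may rely on an LLM or a full classification standard; this sketch swaps in plain keyword rules so the mechanics are visible. The rules, class names, and column mapping are hypothetical placeholders, not a real classification system.

```python
import pandas as pd

# Hypothetical rules: the first keyword found in the type name wins
CLASS_RULES = [
    ("wall", "Wall"),
    ("slab", "Floor slab"),
    ("door", "Door"),
    ("window", "Window"),
]

# Hypothetical mapping from source column names to standard names
COLUMN_MAP = {"Type Name": "Type", "Volume (m3)": "Volume", "Area (m2)": "Area"}


def classify(type_name):
    """Return the class of the first matching rule, else 'Unclassified'."""
    name = str(type_name).lower()
    for keyword, cls in CLASS_RULES:
        if keyword in name:
            return cls
    return "Unclassified"  # left for manual review


def classify_and_map(df):
    """Rename columns to standard names and add a 'Class' column."""
    mapped = df.rename(columns=COLUMN_MAP)  # unknown keys are ignored
    mapped["Class"] = mapped["Type"].map(classify)
    return mapped


raw = pd.DataFrame({
    "Type Name": ["Basic Wall: Concrete 200", "Floor: Slab 250",
                  "Single Door 90x210", "Railing 1100"],
    "Volume (m3)": [12.5, 30.0, 0.1, 0.2],
})
result = classify_and_map(raw)
print(result[["Type", "Class", "Volume"]])
```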

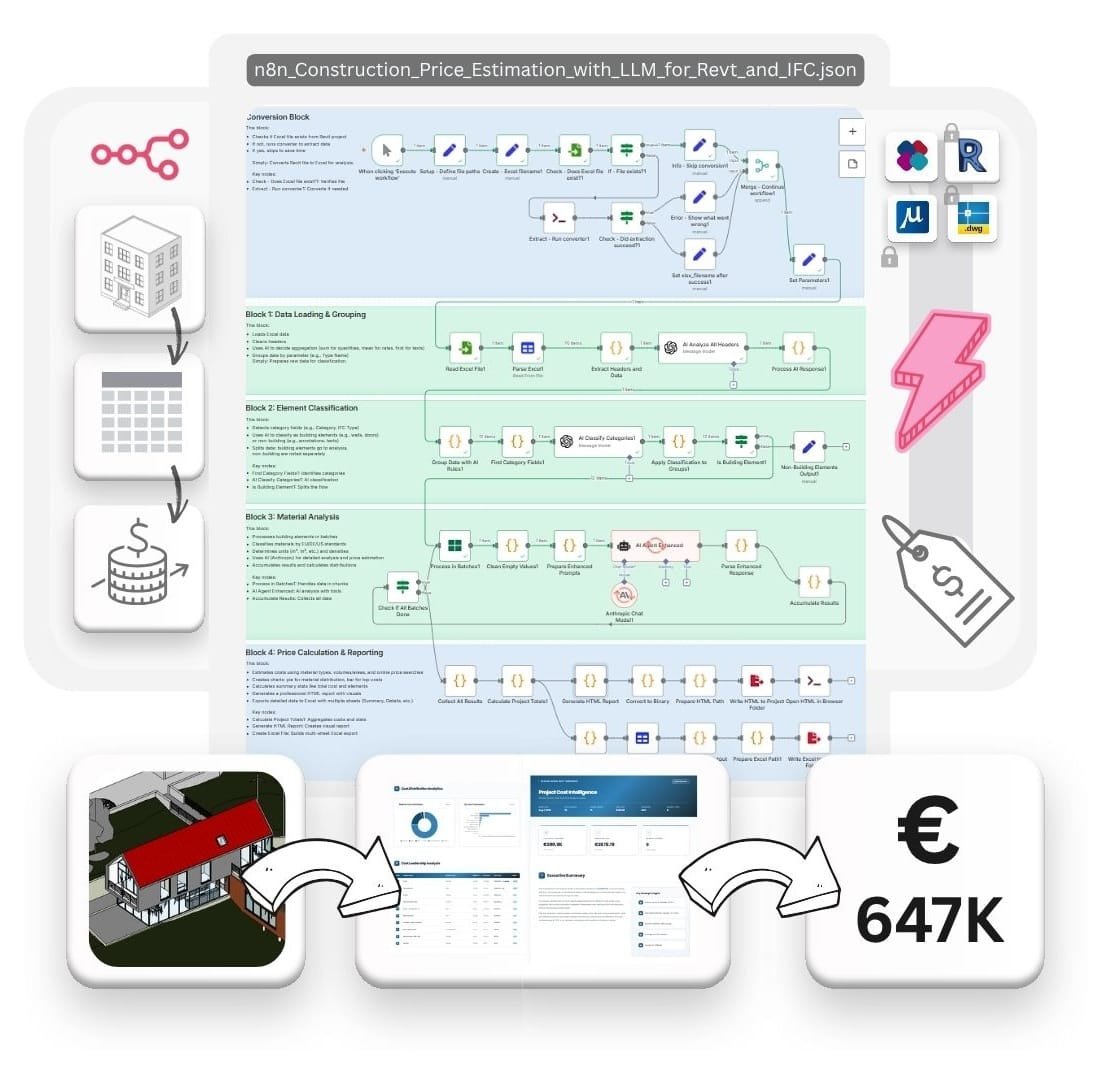

4. Construction Price Estimation Pipeline for CAD (BIM) with LLM (AI)

Automates cost estimation for building elements from CAD/BIM files. Uses AI to classify materials, search market prices, and generate comprehensive cost reports.

To use: Import the JSON file, set converter executable paths and project file path, then click "Execute".
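Once elements are classified and their quantities are known, the cost report itself is simple arithmetic: cost = quantity × unit price, summed per class. In the pipeline the unit prices come from an AI-assisted price search; in this sketch they are a small hypothetical table, so the numbers only illustrate the calculation.

```python
import pandas as pd

elements = pd.DataFrame({
    "Class": ["Wall", "Wall", "Floor slab"],
    "Volume": [12.5, 7.5, 30.0],  # m3
})
# Hypothetical unit prices per m3; in practice these come from a price source
unit_prices = pd.DataFrame({
    "Class": ["Wall", "Floor slab"],
    "UnitPrice": [180.0, 150.0],
})


def estimate_costs(elements, unit_prices):
    """Join unit prices by class and sum volume and cost per class."""
    priced = elements.merge(unit_prices, on="Class", how="left", validate="many_to_one")
    missing = priced.loc[priced["UnitPrice"].isna(), "Class"].unique()
    if len(missing):
        print("No unit price for:", ", ".join(missing))
    priced["Cost"] = priced["Volume"] * priced["UnitPrice"]
    return priced.groupby("Class")[["Volume", "Cost"]].sum()


summary = estimate_costs(elements, unit_prices)
print(summary)
```

For the walls, for example: 12.5 × 180 + 7.5 × 180 = 3,600.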

Automated pipelines for classifying and recognizing building elements fundamentally change the approach to managing construction projects. For projects that only need rough estimates (of cost, time, etc.) within an acceptable error margin, they remove the need for rigorous manual checks of element presence, a task traditionally performed by BIM coordinators or BIM managers.

Likewise, for classification, these systems remove the need for specialists to apply classification rules manually to elements and groups, a core responsibility of, for example, estimators. By automating these steps, workflows based on automated pipelines relieve people of routine, repetitive work and free experts to focus on more strategic tasks.

The world of workflow automation and artificial intelligence is changing the industry:

More and more specialists — from engineers to estimators — will use these digital tools. Instead of spending hours manually reviewing and mapping data, teams will work with automated systems, focusing on decision making and value creation rather than routine processing.

Workflows driven by automation and AI are not just tools. They are becoming an integral part of everyday activities, requiring new skills and opening up new opportunities for efficiency and innovation across the industry.

If a company is not ready to buy or operate a comprehensive ERP system in which processes run in a semi-automated mode, its management will sooner or later start automating the company's processes outside ERP systems.

In the automated version of such an ETL (Extract, Transform, Load) workflow, the overall process looks like modular code: it starts by processing the data and translating it into an open, structured form. Once the structured data is available, various scripts or modules run automatically on a schedule to check for changes, transform the data, and send messages.
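The scheduled part of such a pipeline (check whether the structured data has changed, and only then transform it and send a message) can be sketched as follows. It is a minimal illustration under stated assumptions: the input is a CSV file with hypothetical `Type` and `Volume` columns, change detection uses a SHA-256 hash of the file contents, and `notify` is a hypothetical stand-in for an email or chat message. In n8n, these roles would typically be played by a Schedule Trigger, transformation nodes, and a messaging node.

```python
import hashlib
from pathlib import Path

import pandas as pd


def file_fingerprint(path):
    """SHA-256 of the file contents; it changes whenever the file changes."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def notify(message):
    # Hypothetical stand-in for sending an email or chat message
    print(message)


def run_once(source, state):
    """One scheduled run: extract, transform, and load only if the data changed."""
    fingerprint = file_fingerprint(source)
    if state.get("last_fingerprint") == fingerprint:
        return None  # nothing changed since the previous run
    df = pd.read_csv(source)                                      # extract
    summary = df.groupby("Type")["Volume"].sum()                  # transform
    summary.to_csv(Path(source).with_name("volume_by_type.csv"))  # load
    state["last_fingerprint"] = fingerprint
    notify(f"{Path(source).name} changed: {len(summary)} types summarized")
    return summary
```

A scheduler (cron, Windows Task Scheduler, or an n8n Schedule Trigger) would call `run_once` periodically, with `state` persisted between runs.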

The reasons why I work more and more with ETL-pipelines are simple

Simon Dilhas

CEO & Co-Founder

Abstract AG

A few years ago, the Data-Driven Construction team showed me Jupyter Notebooks, and I fell in love.

Since then, I do simple data manipulations faster with a few lines of code instead of clicking manually in Excel. As always, it was a gradual process: first I automated repetitive tasks. As my confidence and know-how grow, I do more and more.

Moreover, with the advent of ChatGPT, it became even easier: by describing the desired outcome, I get code snippets and just need to adapt them.

It saves me a lot of time. For example, I have a list of new sign-ups and I have my company CRM. Once a month, I check whether all the sign-ups are in the CRM. Before, this was a manual comparison of two different lists. Now it's the press of a button, and I get the list of new sign-ups in the right format to import back into the CRM. By investing 1 h of programming, I save 12 h of work per year.

I reduce failures dramatically. Because I did not like comparing two lists, I did not do it as regularly as I should have. Moreover, I'm not good at comparing data: every phone call disrupted my work, and I forgot to change a data entry. As a consequence, not everybody who should have received my newsletter got it.

I feel like a god when I program. The more complex work becomes, the less direct control I have over the results. But when programming, I'm in absolute control of the result. I write a few lines of code, and the computer executes them exactly as I tell it. When the result is not what I expected, it's usually because of a logical shortcut on my side; the computer does exactly what I tell it.
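The monthly sign-up check described in the quote is a typical anti-join: keep the rows of one list that have no match in the other. A minimal pandas sketch, assuming both lists share an `email` column (the column names, sample data, and output file name are hypothetical):

```python
import pandas as pd


def new_signups(signups, crm, key="email"):
    """Return sign-ups whose key is not yet present in the CRM export."""
    # Normalize the key so "Ben@Example.com " and "ben@example.com" match
    left = signups.assign(_key=signups[key].str.strip().str.lower())
    right = pd.DataFrame({"_key": crm[key].str.strip().str.lower()}).drop_duplicates()
    merged = left.merge(right, on="_key", how="left", indicator=True)
    return merged.loc[merged["_merge"] == "left_only", signups.columns]


signups = pd.DataFrame({
    "email": ["anna@example.com", "Ben@Example.com ", "carla@example.com"],
    "name": ["Anna", "Ben", "Carla"],
})
crm = pd.DataFrame({"email": ["ben@example.com", "dora@example.com"]})

result = new_signups(signups, crm)
result.to_csv("crm_import.csv", index=False)  # file to import back into the CRM
print(result)
```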

WHAT DOES THE ETL-PIPELINE PROCESS LOOK LIKE IN CODE?

Manual process: ~5 minutes

Pipeline runtime: ~5 seconds

1. Extract: data opening

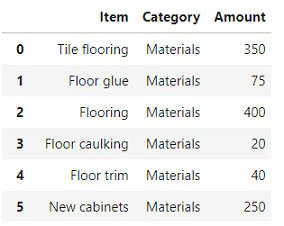

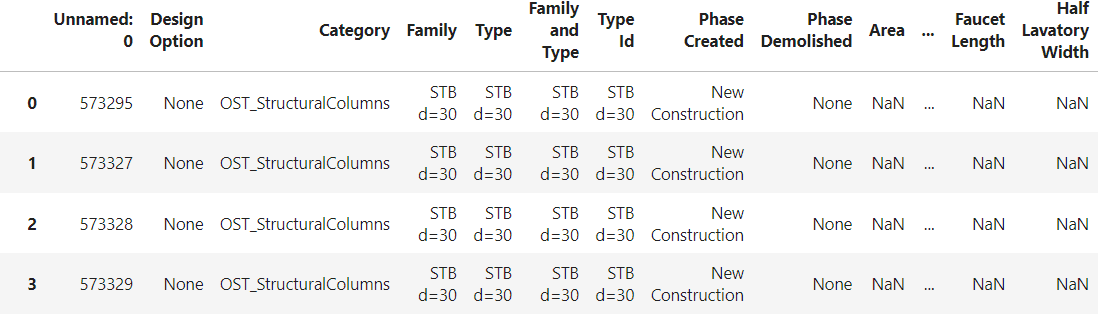

# Importing the necessary libraries
# for data manipulation and plotting
import pandas as pd
import matplotlib.pyplot as plt

# Address to the file received after conversion
# (file_path is the path to the source project file, defined earlier)
DDCoutput_file = file_path[:-4] + "_rvt.xlsx"

# Reading the Excel file into a DataFrame (df)
df = pd.read_excel(DDCoutput_file)

# Show DataFrame table
df

2. Transform: grouping and visualization

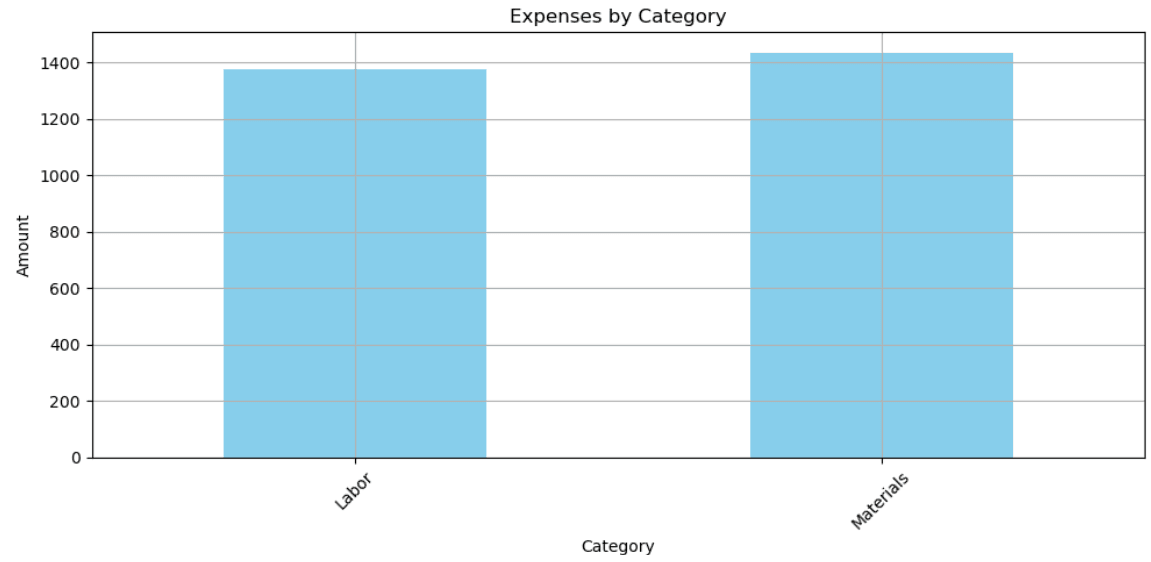

# Grouping by 'Category', summing the 'Amount',
# plotting the results, and getting the figure
fig = df.groupby('Category')['Amount'].sum().plot(kind='bar', figsize=(10, 5), color='skyblue', title='Expenses by Category', ylabel='Amount', rot=45, grid=True).get_figure()

# Adjusting the layout so the rotated labels are not cut off
fig.tight_layout()

3. Load: export

# Specifying the path for saving the figure
# and saving the plot as a PNG file
output_path = r"C:\DDC\expenses_by_category.png"
plt.savefig(output_path)

Manual process: ~20 minutes

Pipeline runtime: ~20 seconds

1. Extract: data opening

import converter as ddc
import pandas as pd

# Address to the file received after conversion
# (file_path is the path to the source project file, defined earlier)
DDCoutput_file = file_path[:-4] + "_rvt.xlsx"

# Importing Revit and IFC data (the converter output is an Excel file)
df = pd.read_excel(DDCoutput_file)
df

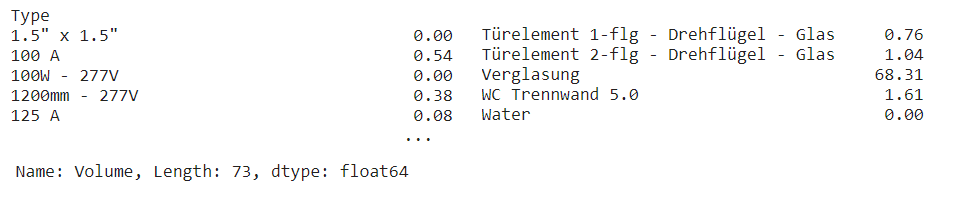

2.1 Transform: grouping and visualization

# Grouping a Revit or IFC project by parameters

dftable = df.groupby('Type')['Volume'].sum()

dftable

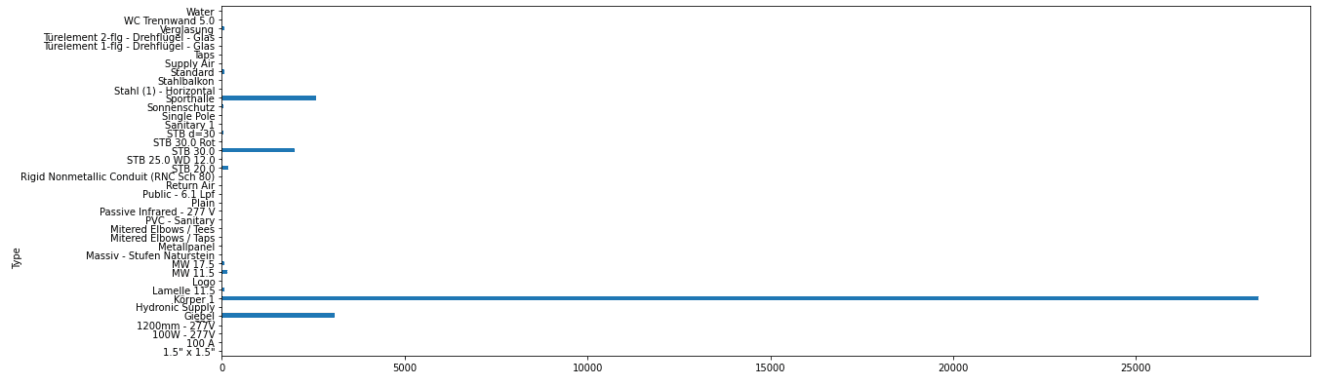

2.2 Transform: displaying

# Displaying a table as a graph
graph = dftable.plot(kind='barh', figsize=(20, 12))

# Save graph as PNG
graphtopng = graph.get_figure()
graphtopng.savefig(r'C:\DDC_sample\graph_type.png', bbox_inches='tight')

3. Load: export

# Installing the library that allows generating PDF documents
# (the "!" prefix is Jupyter notebook syntax)
!pip install fpdf

from fpdf import FPDF

# Creating a PDF document based on the parameters found
pdf = FPDF()
pdf.add_page()
pdf.set_font('Arial', 'B', 16)
pdf.cell(190, 8, 'Grouping of the entire project by Type parameter', 0, 1, 'C')
pdf.image(r'C:\DDC_sample\graph_type.png', w=180)

# Saving a document in PDF format
pdf.output('Report_DataDrivenConstruction.pdf', 'F')

Local Automation Advantages

All workflows run locally — no internet, cloud services, or CAD/BIM licenses required. Output data is structured (SQL, Excel, HTML, JSON), simplifying further analytics and automation.

Key benefits:

- Complete data control

- Security compliance

- Independence from external services

- Customization for company-specific needs

Start Simple:

1. Choose one routine task, like extracting email attachments or grouping Excel data

2. Use existing templates: the n8n library contains over 3,000 proven solutions

3. Iterate on improvements: start with a basic version and gradually add functionality

Statistics and Trends

In 2024, the number of published workflows in n8n's public marketplace exceeded 3,292. According to the company, its user base doubles every 9-12 months.

According to McKinsey Global Institute research, construction remains one of the least digitized industries in the global economy, resulting in productivity losses of 20-30% of potential. For companies, this represents both a warning and an opportunity.

Install n8n: Time to Act

n8n represents a new generation of automation tools making complex integration projects accessible to companies of any size. For the construction industry, this means significantly improved operational efficiency without major IT infrastructure investments.

Automation is no longer a privilege of technical specialists. With tools like n8n, anyone can build and run real workflows — saving hours, reducing errors, and focusing on what truly matters.

In an environment of growing competition and shrinking margins, companies that first harness the potential of modular automation will gain significant advantages in project speed, process quality, and overall operational efficiency.

Additional Resources

DataDrivenConstruction Book available in 32 languages. Discover practical automation cases and open data standards: 🔗datadrivenconstruction.io/books

💬 Telegram Community for discussing new pipelines and automation use cases: 🔗t.me/datadrivenconstruction

📰 Related Articles:

🔗The Post-BIM World: Transition to Data and Processes

🔗The Struggle for Open Data in Construction Industry

Open data and formats will inevitably become the standard in the construction industry — it's just a matter of time. This transition will accelerate if the professional community actively spreads information about open formats, database access tools, and SDKs for reverse engineering.

♻️ If you found this information useful, share it with colleagues. Subscribe for updates at 🔗DataDrivenConstruction, on 🔗LinkedIn, and 🔗Medium